Why is the latency between users and cloud services important? That’s a good question. The answer is twofold:

- Cloud services and SaaS adoption: most business apps have already shifted from on-premise / datacenter-based delivery to SaaS / cloud-based applications, and the Covid pandemic accelerated that change. Poor performance on SaaS and cloud-based applications therefore translates into a massive productivity loss for most organizations. Not convinced? Take a look at this article.

- Latency drives user experience and performance: quite simply, the higher the latency between a user and the different elements of an application platform, the worse the performance that user experiences. If you have any doubt, I recommend you take a look at this article.

So what makes the latency between users and cloud / SaaS platforms shorter or longer?

How does network latency work?

If we go back to the basics, network latency is the delay for a packet to go from one point to another, for instance from a user to a cloud platform (and back).
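One simple way to observe this delay from a user's machine is to time a TCP handshake, which takes roughly one round trip. The sketch below is only an illustration under that assumption; the function name `tcp_connect_rtt_ms` is made up for this example, and a single measurement is noisy, so in practice you would repeat it and look at the minimum or the median.

```python
import socket
import time


def tcp_connect_rtt_ms(host, port=443, timeout=3.0):
    """Return the time (in ms) needed to complete a TCP handshake with host:port."""
    # Resolve the name first so that DNS lookup time is not counted as network latency.
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(sockaddr)  # returns once the three-way handshake is done
        return (time.perf_counter() - start) * 1000.0
```

For instance, `tcp_connect_rtt_ms("en.wikipedia.org")` returns the handshake time to whichever address your resolver gives you for that hostname.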

What drives the level of latency on networks?

- Propagation: the time a signal needs to cover the distance between the two endpoints.

- Network processing and serialization: put simply, the time needed to go through active network devices such as routers, firewalls, load balancers and proxies. It depends on (1) the number of devices on the path, (2) the processing they perform and (3) their level of performance.

- Queueing: in case of congestion on one of the devices, packets will be placed in a queue, delaying the processing and hence increasing latency.

If you need further details on that matter, please read this article.

We end up with 3 main criteria: distance between user and server, number of network devices on the path, individual load/performance on each device on the way.
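To get a feel for how these three criteria add up, here is a rough back-of-the-envelope model. It relies on the fact that light travels through optical fiber at about two thirds of its speed in vacuum, roughly 200 km per millisecond. The per-hop processing time (0.1 ms) and the function name are assumptions chosen for illustration only; real paths are also longer than the straight-line distance.

```python
# Light in optical fiber covers roughly 200 km per millisecond (about 2/3 of c).
FIBER_KM_PER_MS = 200.0


def estimate_one_way_latency_ms(distance_km, hops,
                                per_hop_processing_ms=0.1, queueing_ms=0.0):
    """Rough one-way latency: propagation + device processing + queueing."""
    propagation_ms = distance_km / FIBER_KM_PER_MS
    processing_ms = hops * per_hop_processing_ms  # assumed average per device
    return propagation_ms + processing_ms + queueing_ms


if __name__ == "__main__":
    # Illustrative figures: a 6,000 km path crossing 12 devices, no congestion.
    one_way = estimate_one_way_latency_ms(distance_km=6000, hops=12)
    print(f"one-way: {one_way:.1f} ms, round trip: {2 * one_way:.1f} ms")
```

With these illustrative inputs, propagation alone accounts for 30 ms one way, which shows why distance is usually the dominant term on long paths.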

Understand where your users are and where they connect to

When it comes to evaluating latency between users and cloud, the first element is understanding the physical distance between them and hence:

- Where are my users located?

- Where is each cloud service / SaaS redirecting them?

In this article we will consider that you are aware of your users’ location.

The main question here is to which destination SaaS platforms are redirecting your users.

How do SaaS and Cloud platforms redirect users?

Most platforms proceed as follows:

- Use your user’s IP address

- Look it up in a geolocation database to evaluate their position (services such as MaxMind, IPWhois.io or others)

- Redirect them to the closest node available using the DNS service. Their DNS records are resolved in a different way depending on the location.
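The three steps above can be sketched as follows. This is a simplified illustration, not how any particular provider implements it: the geolocation table `GEO_DB`, the node list `NODES` and all coordinates are hypothetical, the IP prefixes are reserved documentation ranges, and "closest" is approximated by great-circle distance.

```python
import ipaddress
import math

# Hypothetical geolocation database: IP prefix -> (latitude, longitude).
GEO_DB = {
    ipaddress.ip_network("192.0.2.0/24"): (48.85, 2.35),      # pretend: Paris
    ipaddress.ip_network("198.51.100.0/24"): (19.08, 72.88),  # pretend: Mumbai
}

# Hypothetical points of presence of a SaaS provider.
NODES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def geolocate(ip):
    """Steps 1 and 2: look the user's IP address up in the geolocation table."""
    addr = ipaddress.ip_address(ip)
    for network, position in GEO_DB.items():
        if addr in network:
            return position
    return None  # unknown prefix: the provider has to guess or use a default


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def node_for_ip(ip):
    """Step 3: pick the node closest to the estimated position."""
    position = geolocate(ip)
    if position is None:
        return None
    return min(NODES, key=lambda name: haversine_km(position, NODES[name]))
```

In a real deployment, the selected node would be returned in the DNS answer. Note how the outcome depends entirely on the accuracy of the table: a wrong entry in `GEO_DB` silently sends the user to a distant node.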

Once this is done there are two main items that can affect user to cloud latency negatively:

- A lack of capillarity in the infrastructure of your SaaS provider or its subprocessors

- Errors in the geolocation process

Understanding the capillarity of your SaaS provider’s platform

This is quite simple: from how many geographical points does your SaaS provider (or their cloud sub-processors) provide their application? How close can it be to your users? Let’s take two examples:

Running their app from their own data centers

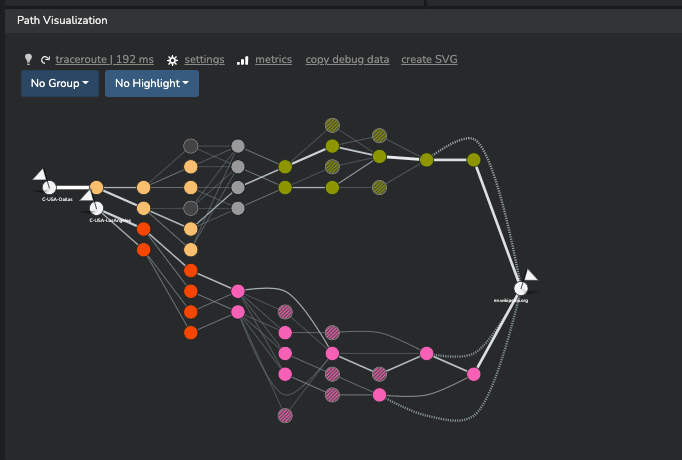

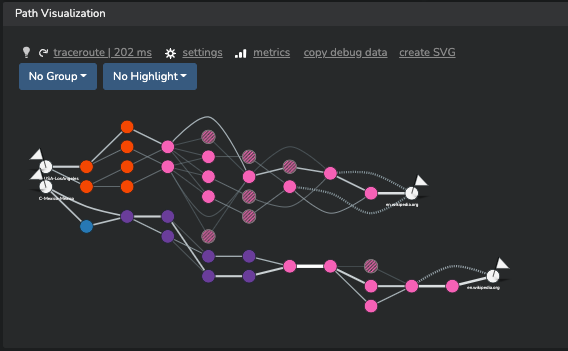

In this example, we analyze the network path to en.wikipedia.org from multiple locations. Wikimedia (which hosts Wikipedia) operates from multiple data centers around the world. As you will see, the result depends on where we test the network path from:

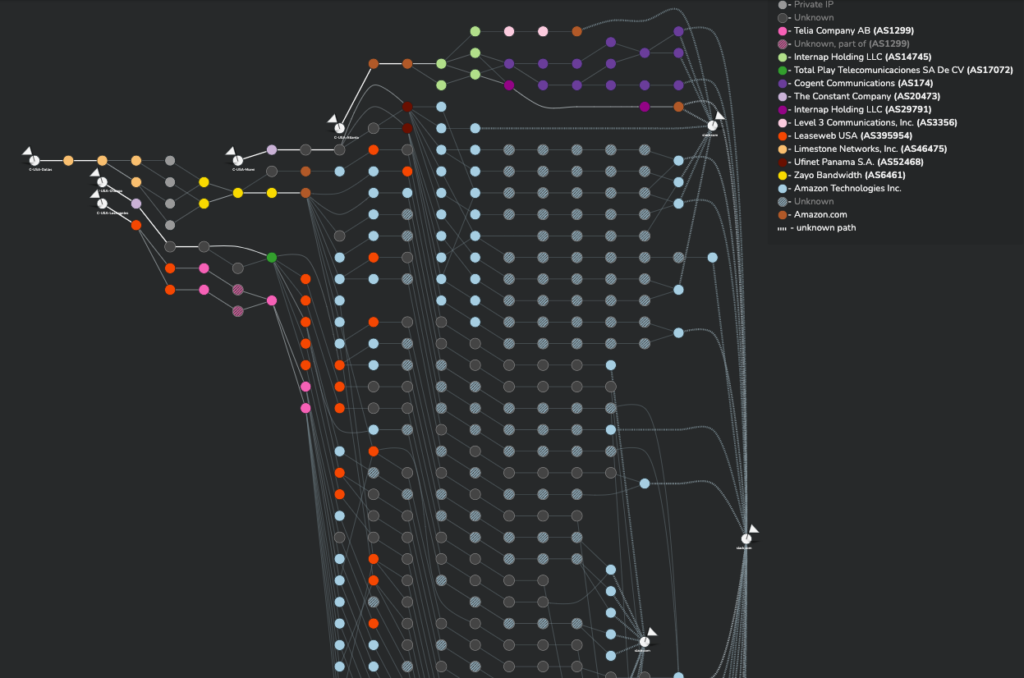

1. Testing from multiple locations in the same country (here the United States), we get the same DNS resolution for en.wikipedia.org and the path terminates in a single location.

2. Testing from multiple locations in different countries (and here different continents), we get distinct DNS resolutions, driving our network paths to end in different datacenters.
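To reproduce this kind of comparison yourself, you can collect the DNS answers obtained at each test point and group the test points that received the same answer. The sketch below assumes you run `resolve_ips` on each vantage point and gather the results centrally. The function names, the vantage point names and the IP addresses (reserved documentation ranges) are hypothetical and do not reflect Wikimedia's actual addresses.

```python
import socket
from collections import defaultdict


def resolve_ips(hostname, port=443):
    """Return the sorted IP addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def group_by_answer(answers):
    """Group vantage points that received the same set of IP addresses."""
    groups = defaultdict(list)
    for vantage_point, ips in answers.items():
        groups[tuple(sorted(ips))].append(vantage_point)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical answers collected from three test points.
    answers = {
        "new-york": ["198.51.100.1"],
        "san-francisco": ["198.51.100.1"],
        "paris": ["203.0.113.5"],
    }
    for ips, points in group_by_answer(answers).items():
        print(ips, "<-", points)
```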

Running an application in large cloud hosting environment

Let’s assume our SaaS / cloud service provider uses a large scale cloud infrastructure.

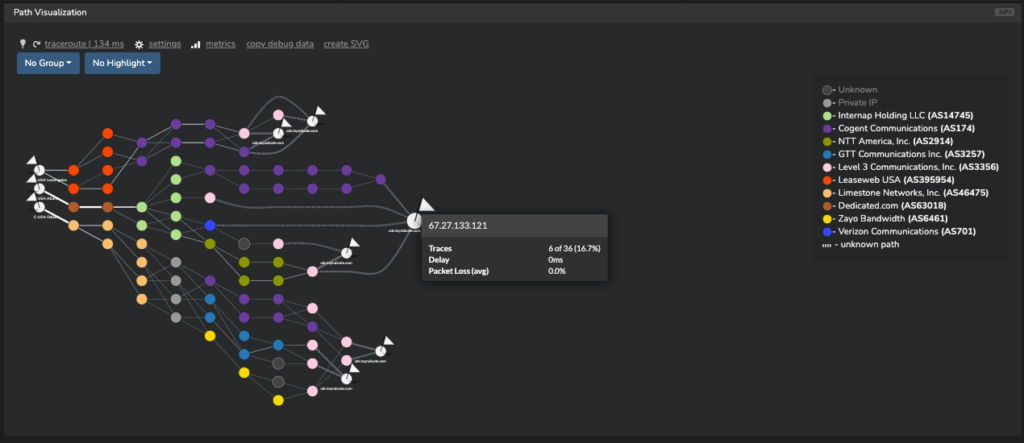

In this example, we test the network path from multiple locations in the USA to a single hostname. The provider hosts their app in AWS (Amazon Web Services).

As you can see, there are multiple DNS resolutions taking place and depending on the time and the location of our test points we get redirected to multiple IP addresses inside AWS’s infrastructure. Depending on the location of these hosts, this can translate into latency variation that can affect user experience significantly.

Let’s take a second example: a hostname served by a CDN (Content Delivery Network).

Not all cloud platforms are created equal

All hosting platforms have a given capillarity and geographical coverage. A lack of coverage may be a non-issue for one organization and a big deal for another: it depends on how well that coverage matches the geographic distribution of their users.

Is geolocation working correctly for your users?

The basis of all this is translating each user’s IP address into a geographical position. Geolocation is not flawless and can simply go wrong. Certain IP addresses are poorly documented in Whois databases such as RIPE, and some operators do not correctly maintain the geographical data for all of their prefixes.

Geolocation errors

This is the most common consequence of that lack of accurate data: users have their location wrongly interpreted and get redirected to a node far away from where they actually are.

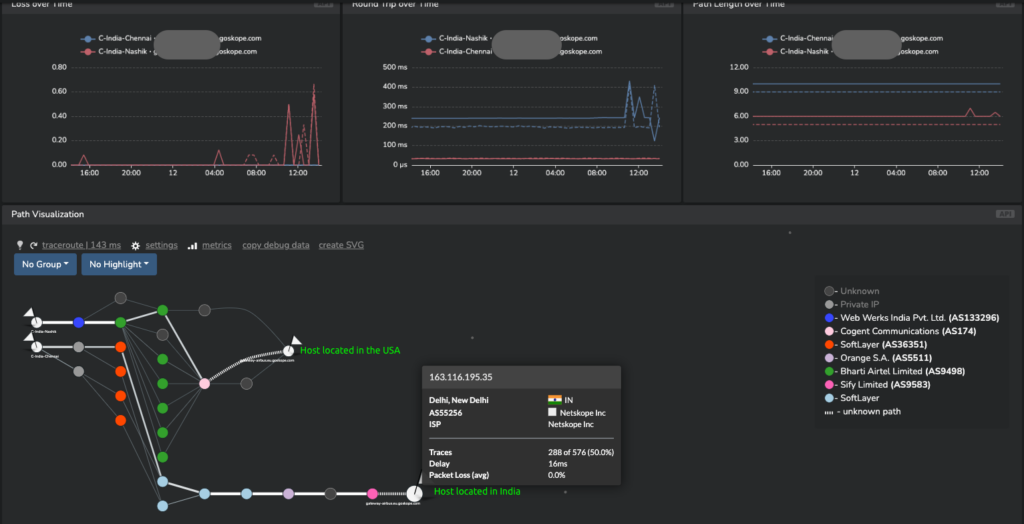

Here is an example: two test stations are located in India. They are connected through two distinct operators (Reliance Jio and Airtel, respectively the #1 and #2 operators nationally). While one of the test stations trying to reach a secured cloud gateway service gets redirected locally, the other one is redirected to a node located in the USA.

Frequent changes in redirection

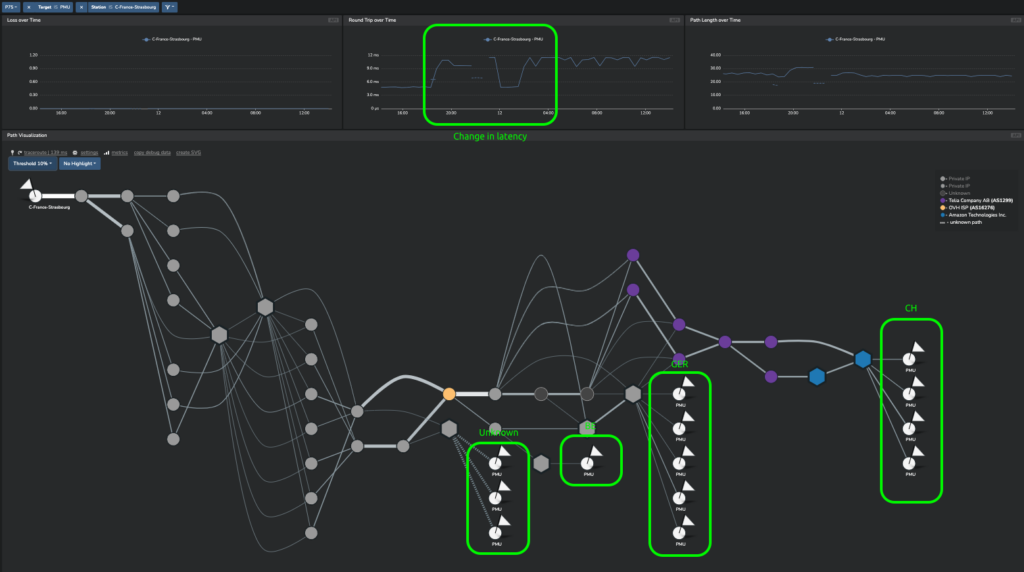

We can also observe that the redirection of a specific IP address changes over time (within a single day), pointing to hosts located in different countries for the same FQDN (hostname). Take the example of pmu.fr.
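If you record, at regular intervals, the country of the host a given hostname resolves to, spotting these changes is straightforward. The following sketch assumes such a history is already available as `(timestamp, country)` pairs. The function name and the sample data are purely illustrative and are not actual measurements for pmu.fr.

```python
def redirection_changes(observations):
    """Return (timestamp, previous, current) each time the destination changes.

    observations: iterable of (timestamp, country) pairs in chronological order.
    """
    changes = []
    previous = None
    for timestamp, country in observations:
        if previous is not None and country != previous:
            changes.append((timestamp, previous, country))
        previous = country
    return changes


if __name__ == "__main__":
    # Hypothetical history for one test point over a day.
    history = [("08:00", "FR"), ("12:00", "FR"), ("16:00", "DE"), ("20:00", "FR")]
    for timestamp, before, after in redirection_changes(history):
        print(f"{timestamp}: {before} -> {after}")
```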

Last important factor: the length and the quality of their network path

Assuming your users are lucky and are redirected to a node which is the closest to them (which hopefully remains the usual case), the network conditions between the user and the server can also vary significantly and affect latency.

Every operator on the network path can affect latency, either by changing the route or by running congested routers that add delay to the overall path.

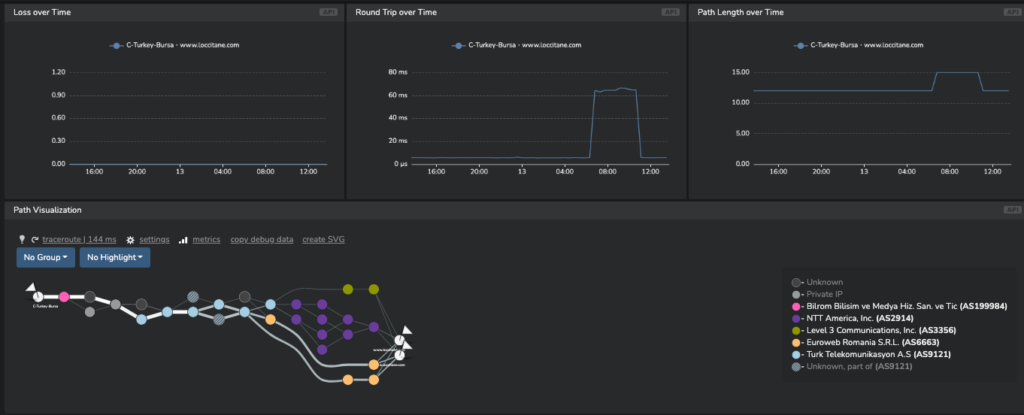

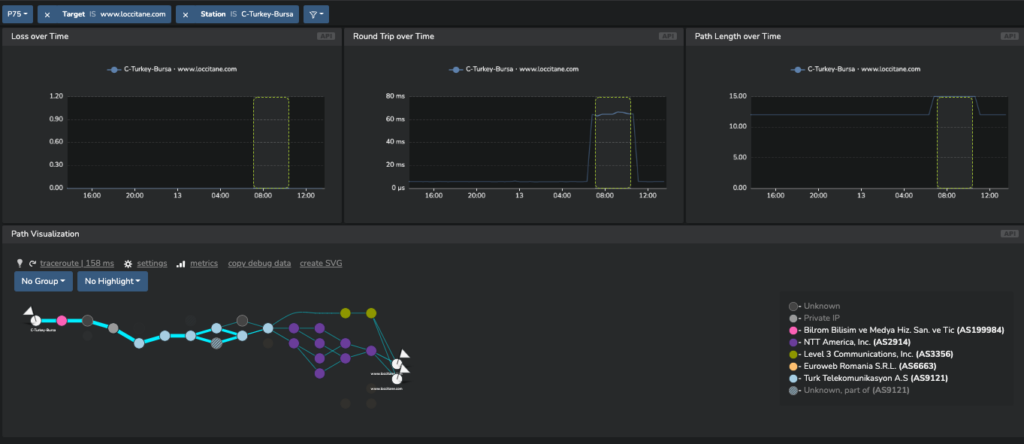

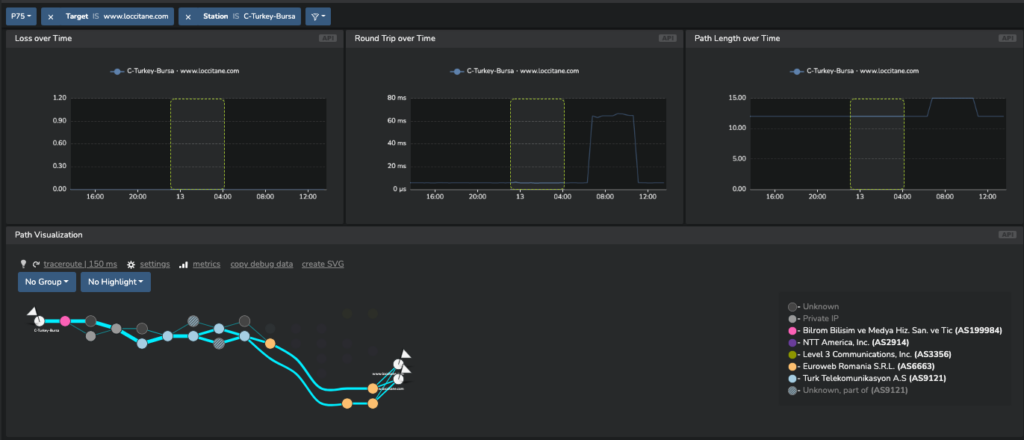

In this example, we see latency spike from less than 10 ms to over 60 ms for a test point located in Turkey reaching a hostname hosted by Cloudflare.

By looking at the detailed path at the two time intervals, we can see that the route which is taken is different:

And it results in a massive difference in latency.
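A simple way to catch this kind of event in a series of latency measurements is to compare each sample with a baseline such as the median. The sketch below is one possible heuristic among many; the function name, the threshold factor of 3 and the sample values (chosen to resemble a jump from under 10 ms to over 60 ms) are assumptions for illustration.

```python
from statistics import median


def find_spikes(samples_ms, factor=3.0):
    """Return (index, value) for samples exceeding factor x the median latency."""
    baseline = median(samples_ms)
    return [(i, value) for i, value in enumerate(samples_ms)
            if value > factor * baseline]


if __name__ == "__main__":
    # Illustrative latency samples in milliseconds.
    samples = [8, 9, 8, 62, 65, 9, 8]
    print(find_spikes(samples))
```

Once a spike is flagged, comparing the hop-by-hop path before and during the spike tells you whether a route change is responsible.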

How should you monitor user to cloud latency?

Keeping track of where your users are, where their SaaS apps redirect them and what the resulting latency is remains key to maximizing their user experience.

Kadiska provides a service to monitor all these parameters; to discover how it is done, follow this link!