Latency and jitter are common terms in network monitoring. Network engineers often treat “latency” as one of the major network performance metrics. For videoconferencing tools like Microsoft Teams, Zoom or Google Meet, “jitter” is also considered a key performance driver. But what is the difference between the two, and what should their respective baselines be to ensure good performance?

What does “latency” mean?

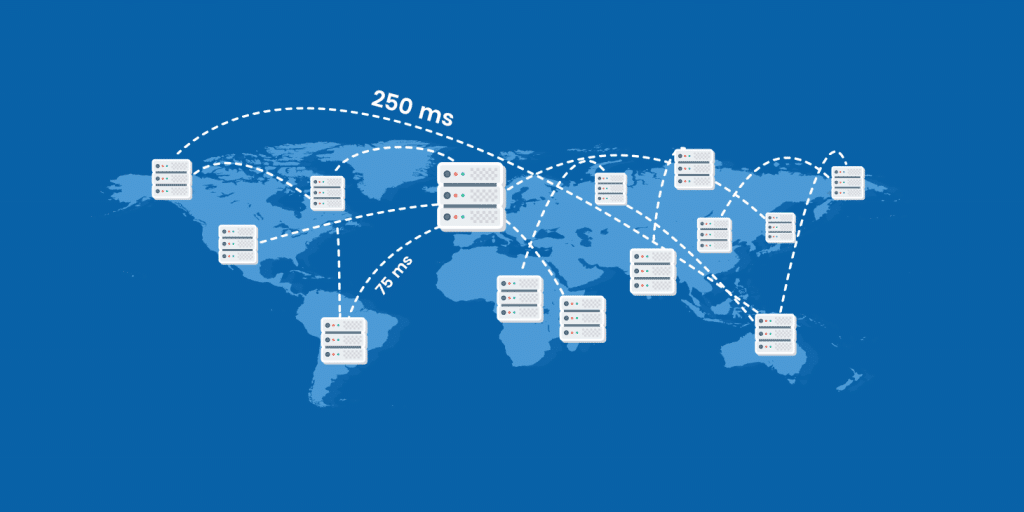

In networking, we can define “latency” as the time it takes for a packet to traverse a network from a source to a destination. It is driven by many different factors, as explained in detail in our article “What is network latency? How it works and how to reduce it”. Network congestion, for instance, has a direct impact on network latency. This is why it is so important to measure latency when monitoring network performance.

As our article “Why network latency drives digital performance” explains, network latency is a key performance driver for digital services. This is especially true for interactive applications like voice/video communications. For this kind of flow, a network latency reaching 150 ms will be noticeable to a human being!

There are different ways to measure network latency. Generally, we distinguish one-way latency, measured from a source to a destination, from two-way latency, measured from a source to a destination and back. Two-way latency is also called round-trip time (RTT).
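As a rough illustration of a two-way measurement, the sketch below times a TCP handshake in Python, which takes about one round trip. The function names `tcp_connect_rtt_ms` and `median_rtt_ms` are hypothetical, chosen for this example, and the approach is only an approximation: the measured time also includes local processing and, if a hostname is passed, DNS resolution. One-way latency is harder to measure because it requires synchronized clocks at both ends.

```python
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Approximate the round-trip time by timing a single TCP handshake."""
    start = time.perf_counter()
    # create_connection() returns once the SYN / SYN-ACK exchange has
    # completed, which takes roughly one network round trip.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def median_rtt_ms(host: str, port: int, samples: int = 5) -> float:
    """Take several handshake timings and return the median, in milliseconds."""
    return statistics.median(
        tcp_connect_rtt_ms(host, port) for _ in range(samples)
    )


# Example call (assumes the target accepts TCP connections on port 443):
# print(median_rtt_ms("example.com", 443))
```

Taking the median of several samples reduces the influence of a single slow handshake on the reported value.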

What does “jitter” mean?

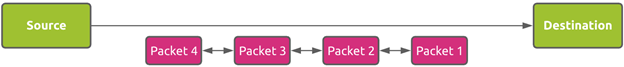

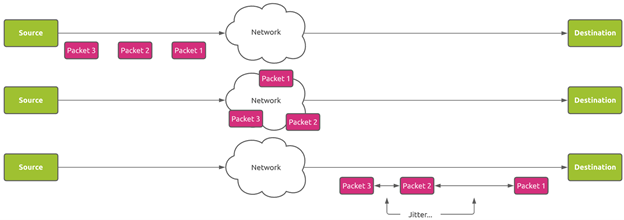

In an ideal networking world, packets are sent from a source to a destination without any loss, in the same order, and in the same even-paced intervals.

In reality, though, the path between the source and the destination may be strewn with pitfalls. For example, network congestion may occur, which can lead to packet loss, additional delays or dynamic route changes. As a result, the packets may get to the destination in chunks or even in the wrong order!

We define jitter as the variation of the network delay between consecutive received packets. Note that each word matters. The definition clearly states “… between consecutive received packets”. This implies that, to be accurate, we should calculate jitter on a per-flow basis. Comparing the average network latency of different communications is not a proper measure of jitter!
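To make the per-flow definition concrete, here is a minimal Python sketch of two ways to compute jitter from the packets of a single flow. It assumes each packet carries a send timestamp and that the receiver records an arrival timestamp, both in milliseconds. The function names are hypothetical. The first function is a plain average of delay differences between consecutive packets; the second applies the smoothed interarrival jitter estimator described in RFC 3550 for RTP, which is one common choice rather than the only one.

```python
from typing import List, Tuple

# One entry per packet of a single flow, in arrival order:
# (sent_ms, received_ms)
Packet = Tuple[float, float]


def mean_delay_variation_ms(packets: List[Packet]) -> float:
    """Average absolute change in delay between consecutive packets."""
    transits = [received - sent for sent, received in packets]
    diffs = [abs(b - a) for a, b in zip(transits, transits[1:])]
    return sum(diffs) / len(diffs) if diffs else 0.0


def rfc3550_jitter_ms(packets: List[Packet]) -> float:
    """Smoothed jitter estimate: J = J + (|D| - J) / 16 for each new packet."""
    jitter = 0.0
    prev_transit = None
    for sent, received in packets:
        transit = received - sent
        if prev_transit is not None:
            # D is the change in transit time versus the previous packet.
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0
        prev_transit = transit
    return jitter


# Delays of 50, 60 and 50 ms: the delay changes by 10 ms twice.
flow = [(0.0, 50.0), (20.0, 80.0), (40.0, 90.0)]
print(mean_delay_variation_ms(flow))  # 10.0
print(rfc3550_jitter_ms(flow))        # 1.2109375
```

Because both functions only use differences between delays, a constant clock offset between sender and receiver cancels out, so jitter can be estimated without synchronized clocks.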

Jitter is not always a problem. Standard TCP-based applications can usually cope with it. But jitter is a key performance driver for interactive voice/video communications. Exceeding 20 to 30 ms of jitter will affect the voice/video quality. Techniques to reduce jitter exist. One of the simplest consists of buffering the packets at the final destination so that each client delivers packets to the application at an even pace. Nevertheless, buffering means increasing the latency! So there is a trade-off to find.
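The buffering trade-off can be sketched in a few lines of Python. This is a simplified fixed-delay playout buffer, not how any particular videoconferencing product works. The function name `playout_schedule` and the packet layout `(seq, sent_ms, received_ms)` are assumptions made for the example. Each packet is released at its send time plus the first packet's delay plus a fixed buffer, and packets arriving after their release time are counted as late.

```python
from typing import List, Tuple

# (sequence number, sent_ms, received_ms)
Packet = Tuple[int, float, float]


def playout_schedule(
    packets: List[Packet], buffer_ms: float
) -> Tuple[List[Tuple[int, float]], List[int]]:
    """Return (played, late): playout times per packet and late sequence numbers."""
    if not packets:
        return [], []
    # Use the first packet's transit time as the reference delay.
    _, first_sent, first_received = packets[0]
    base_transit = first_received - first_sent
    played, late = [], []
    for seq, sent, received in packets:
        # Every packet is released a fixed time after it was sent,
        # which restores the sender's even pacing.
        deadline = sent + base_transit + buffer_ms
        if received <= deadline:
            played.append((seq, deadline))
        else:
            late.append(seq)  # arrived too late to be played in order
    played.sort()
    return played, late


# Packets sent every 20 ms with delays of 50, 75 and 52 ms.
flow = [(1, 0.0, 50.0), (2, 20.0, 95.0), (3, 40.0, 92.0)]
print(playout_schedule(flow, buffer_ms=20.0))
# ([(1, 70.0), (3, 110.0)], [2])
print(playout_schedule(flow, buffer_ms=30.0))
# ([(1, 80.0), (2, 100.0), (3, 120.0)], [])
```

With a 20 ms buffer, packet 2 misses its slot; with a 30 ms buffer all packets play at an even 20 ms pace, at the cost of 10 ms of extra latency for every packet.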

Takeaway

Network latency is a key performance driver for any kind of digital service and is as such one of the main performance metrics you should monitor when delivering digital services.

Jitter, on the other hand, is extremely important in the context of highly interactive applications like videoconferencing solutions. It is much more complex to measure, as it requires details of how packets are delivered inside specific flows.

In public and hybrid networks, one of the goals of network monitoring is to measure latency and jitter, but more importantly to understand which network path we are using and which part of that path is generating latency and jitter.

To find out more on network path monitoring, check this article.