In this article, we explain the main challenges faced by the HTTP/1.1 protocol that have led to the adoption of HTTP/2. We also present some of the major features added by HTTP/2 that address the limitations of older protocols.

We’ll first focus on the HTTP protocol itself, so we won’t cover an important aspect that is tightly linked to web transactions: security. The adoption of new standards like HTTP/2 has been accompanied by more secure and better-performing security protocols such as TLS 1.3. We’ll address this important topic in another series of articles.

According to W3Techs, 50.4% of all websites worldwide were using the HTTP/2 protocol as of March 2021.

Major Content Delivery Networks (CDNs) have HTTP/2 enabled across their networks:

- keyCDN: 68% of all HTTPS traffic was HTTP/2 as of April 2016

- Cloudflare: 52.93% of all HTTPS traffic was HTTP/2 as of February 2016

- Akamai: Over 15% of all HTTPS traffic on the Akamai Secure Network (ESSL) was HTTP/2 as of January 2017. This percentage is low compared with the other CDNs because Akamai enables HTTP/2 on an opt-in basis, whereas the CDNs above enable it on an opt-out basis.

Since 2016, major cloud providers like Amazon, Microsoft and Google have successively integrated HTTP/2 support into their offerings, such as load balancing services, PaaS-based web services and Software Development Kits.

Today, about 96.7% of all browsers available on the market support the HTTP/2 protocol.

Main limitations of HTTP/1.1

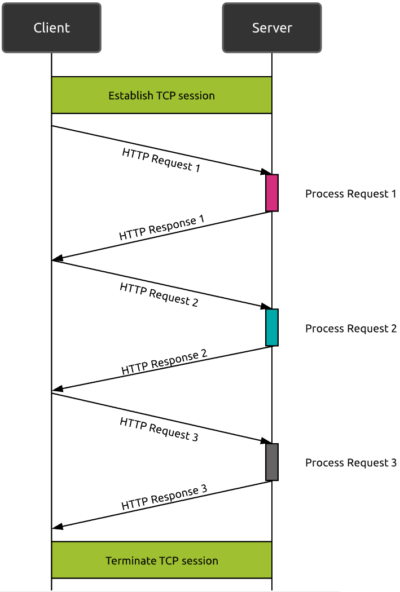

Problem 1: only one request at a time

HTTP/1.1 has been around for more than 20 years. Built on top of the TCP transport layer, it is a request-response protocol: the web browser requests a resource and waits for the response. With HTTP/1.1, an HTTP request no longer requires a dedicated TCP session (as was the case in HTTP/1.0), but within a given TCP session, only one HTTP request can be outstanding at a time. This makes HTTP/1.1 inefficient given the complexity of today’s websites.

The solution proposed by HTTP/1.1: “pipelining”

The first attempt in HTTP/1.1 to solve this problem was the introduction of “pipelining”. Pipelining consists of sending multiple requests back to back on the same TCP session, without waiting for the response to the previous request.

With pipelining, even if the server can receive multiple requests, it must still send the responses in the same order as the requests, potentially blocking the whole process if one request is slow. This problem is called “Head Of Line Blocking” (HOLB).

The workaround used with HTTP/1.1 consists of opening multiple parallel TCP sessions (typically around 6, but the number depends on the web browser). This does not completely solve the HOLB problem, but it limits its impact to individual sessions.
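To make head-of-line blocking concrete, here is a minimal sketch of the ordering constraint in pipelining. It assumes the server works on all pipelined requests concurrently and ignores transfer time; the function name `pipelined_completion_times` and the processing times are hypothetical, chosen only for illustration.

```python
from itertools import accumulate


def pipelined_completion_times(processing_ms):
    """Time at which each response can leave the server when responses
    must be sent in request order (HTTP/1.1 pipelining).

    Assumption: the server processes all requests concurrently, so a
    response is ready after its own processing time, but it cannot be
    sent before every earlier response has been sent.
    """
    # A running maximum models "wait for everything ahead of me".
    return list(accumulate(processing_ms, max))


# Hypothetical processing times in ms: the first request is slow.
processing_ms = [500, 20, 30]
print(pipelined_completion_times(processing_ms))  # [500, 500, 500]
```

The two fast responses are ready after 20 ms and 30 ms, yet neither can be sent before the slow one at 500 ms.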

Problem 2: duplication of data

Another problem with HTTP/1.1 is the duplication of data across multiple parallel requests. Since HTTP is a “stateless” protocol, sending requests in parallel through different TCP sessions implies sending the same HTTP header parameters multiple times (cookies and other parameters like “User-agent”).

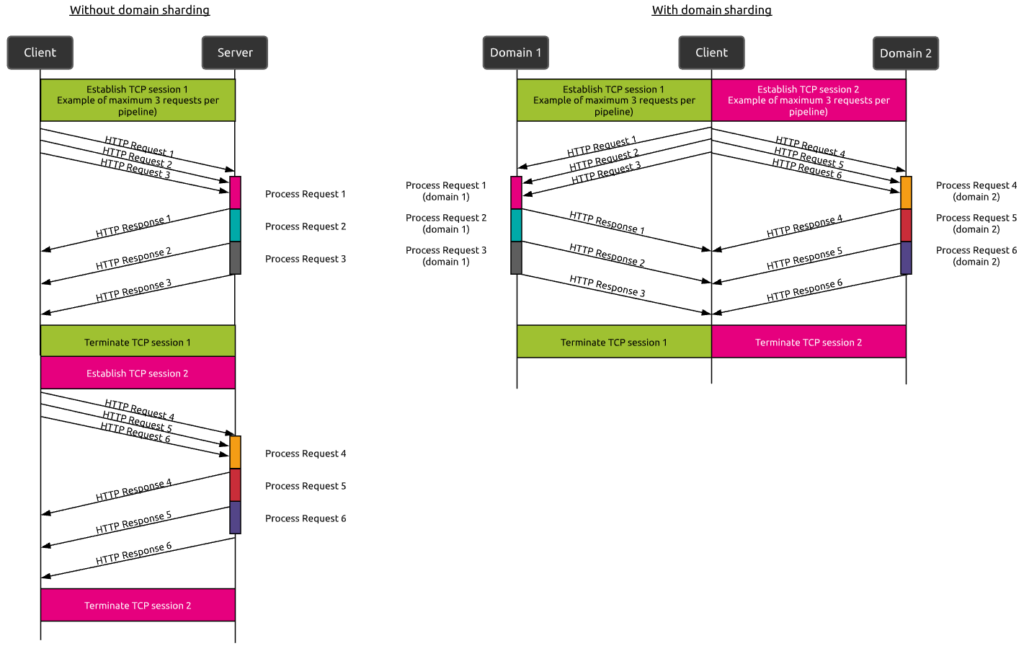

This led to the development of additional techniques like “image sprites” and “domain sharding”.

The solution proposed by HTTP/1.1: “image sprite” and “domain sharding”

- An “image sprite” combines multiple images (referenced from a CSS file, for example) into a single one so that they can all be sent in one request-response transaction.

- “Domain sharding” aims to work around the limited number of concurrent TCP sessions per domain (an example with a maximum of 3 sessions is shown in the figures hereunder). With this technique, the requests for resources are split over multiple domains to increase the number of possible parallel TCP connections.
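As an illustration of domain sharding, the sketch below spreads resource paths over several hostnames. The shard hostnames and the helper `shard_url` are hypothetical, and using a CRC32 hash to pick the shard is just one possible choice.

```python
import zlib

# Hypothetical shard hostnames; a real site would use its own domains.
SHARDS = ["static1.example.com", "static2.example.com", "static3.example.com"]


def shard_url(path, shards=SHARDS):
    """Map a resource path to one shard hostname, deterministically.

    A stable hash (CRC32) keeps the same resource on the same hostname
    across page loads, so the browser cache remains effective.
    """
    index = zlib.crc32(path.encode("utf-8")) % len(shards)
    return f"https://{shards[index]}{path}"


for path in ["/img/logo.png", "/css/site.css", "/js/app.js"]:
    print(shard_url(path))
```

Since the browser's connection limit applies per hostname, each extra shard allows additional parallel TCP connections, at the cost of extra DNS lookups and connection setups.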

How does HTTP/2 differ from HTTP/1.X?

HTTP/2, ratified in May 2015 by the Internet Engineering Task Force (IETF) as RFC 7540, is the next-generation successor of HTTP/1.1.

The main goals of this initiative were to address perceived problems in performance and efficiency, so that HTTP/2 can boost web performance. It also provides enhanced security.

We can summarize the major improvements of HTTP/2 in two categories (if we exclude security aspects):

- The way the server transfers data to the client: binary data formatting and header compression are ways to optimize this data transfer.

- The way communication between a client and a server happens: multiplexing and server push techniques mainly improve it.

Data formatting

As opposed to HTTP/1.1, which keeps all requests and responses in plain text format, HTTP/2 uses a binary framing layer to encapsulate all messages in binary format, while still maintaining HTTP semantics, such as methods and headers. At the OSI application layer, messages are still created in the conventional HTTP formats, but the underlying layer then converts these messages into binary. This ensures that web applications created before HTTP/2 can continue functioning as normal when interacting with the new protocol.

The conversion of messages into binary allows HTTP/2 to try new approaches to data delivery not available in HTTP/1.1. This is the foundation that enables all other features and performance optimizations provided by the HTTP/2 protocol.
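To give an idea of what binary framing looks like, here is a small sketch that builds and parses the 9-byte header preceding every HTTP/2 frame, following the layout in RFC 7540 (24-bit payload length, 8-bit type, 8-bit flags, 1 reserved bit plus a 31-bit stream identifier). The helper names `pack_frame_header` and `unpack_frame_header` are hypothetical, and the sketch leaves out frame payloads entirely.

```python
import struct

# Frame type and flag values as defined in RFC 7540.
FRAME_TYPE_HEADERS = 0x1
FLAG_END_STREAM = 0x1
FLAG_END_HEADERS = 0x4


def pack_frame_header(length, frame_type, flags, stream_id):
    """Build the 9-byte HTTP/2 frame header."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    # ">I" packs 4 bytes; drop the first one to keep a 3-byte length.
    length_bytes = struct.pack(">I", length)[1:]
    return length_bytes + struct.pack(">BBI", frame_type, flags, stream_id)


def unpack_frame_header(header):
    """Inverse operation: returns (length, type, flags, stream_id)."""
    length = int.from_bytes(header[:3], "big")
    frame_type, flags, stream_id = struct.unpack(">BBI", header[3:9])
    # Mask out the reserved high bit of the stream identifier.
    return length, frame_type, flags, stream_id & 0x7FFFFFFF


header = pack_frame_header(
    16, FRAME_TYPE_HEADERS, FLAG_END_STREAM | FLAG_END_HEADERS, 1
)
print(header.hex())  # 000010010500000001
print(unpack_frame_header(header))  # (16, 1, 5, 1)
```

Because every frame carries a stream identifier, frames belonging to different requests can share one connection, which is what the features described in the rest of this article rely on.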

Header compression

Transferring data from the server to the client consumes bandwidth and takes time. Restricted bandwidth or a large payload will of course impact overall performance, so any way to reduce the amount of data to be transferred is an asset. One good example is caching, as explained in our article “Improve web performance with content caching”.

HTTP has supported data compression mechanisms for ages. Headers, however, are sent as uncompressed text, and a lot of redundant data is sent with each transaction.

HTTP/2 uses the HPACK specification to compress the large amount of redundant data carried in header frames. To make this possible, both client and server maintain a list of headers used in previous requests. HPACK compresses the individual value of each header before it is transferred to the server. The server then looks up the encoded data in its list of previously transferred header values to reconstruct the full header information.
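The idea of both sides keeping a list of previously seen headers can be sketched as follows. This is a deliberately simplified model, not the HPACK wire format: it has no static table, no Huffman coding and no table size limit, and the class names and header values are hypothetical.

```python
class Encoder:
    """Sender side: replace already-seen headers with a table index."""

    def __init__(self):
        self.entries = []  # ordered list of (name, value) pairs seen so far

    def encode(self, headers):
        out = []
        for pair in headers:
            if pair in self.entries:
                out.append(("index", self.entries.index(pair)))
            else:
                self.entries.append(pair)
                out.append(("literal", pair))
        return out


class Decoder:
    """Receiver side: mirror the sender's table to rebuild full headers."""

    def __init__(self):
        self.entries = []

    def decode(self, encoded):
        headers = []
        for kind, value in encoded:
            if kind == "index":
                headers.append(self.entries[value])
            else:
                self.entries.append(value)
                headers.append(value)
        return headers


request = [("user-agent", "ExampleBrowser/1.0"), ("cookie", "session=abc123")]
encoder, decoder = Encoder(), Decoder()

first = encoder.encode(request)   # headers sent in full the first time
second = encoder.encode(request)  # only small indexes the second time
print(second)  # [('index', 0), ('index', 1)]
assert decoder.decode(first) == request
assert decoder.decode(second) == request
```

On the second request, the cookie and user agent are no longer retransmitted; only references to the shared table cross the network.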

Multiplexing

In our article “Why network latency drives digital performance”, we explain how TCP impacts web performance. Reducing the number of required round trips is a key performance driver, especially for long-distance communications.

With HTTP/1.1, there is a limit on the number of web transactions per TCP session. As we have seen previously, techniques like pipelining and domain sharding help reduce the performance impact of establishing multiple TCP sessions, but they do not completely solve the problem. Setting up TCP sessions consumes resources and time!

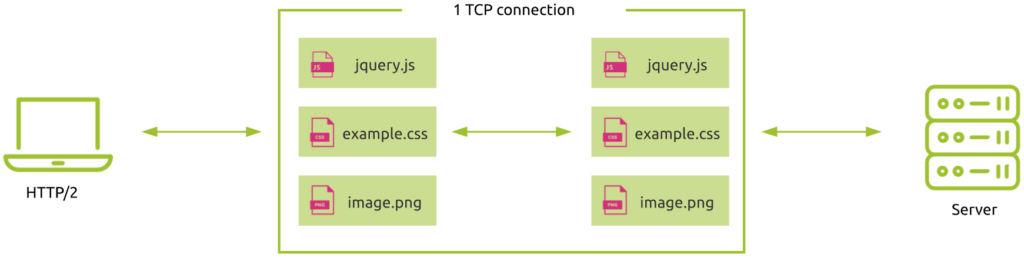

HTTP/2 introduces the multiplexing technique to address this challenge.

Instead of using one dedicated TCP session per requested element (HTTP/1.0) or having to handle requests in order (HTTP/1.1 with pipelining), this technique allows the browser to request all elements within the same TCP connection, with requests and responses interleaved rather than handled one after another.

This technique solves the problem of Head Of Line Blocking at the HTTP level and makes domain sharding unnecessary.
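A rough sketch of multiplexing: several responses are cut into frames tagged with a stream identifier, interleaved on one connection, and reassembled by the receiver. The round-robin interleaving, the tiny frame size and the function names are assumptions made for illustration; a real HTTP/2 implementation schedules frames according to its own rules.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, frame_size):
    """Cut one response body into (stream_id, chunk) frames."""
    return [
        (stream_id, payload[i:i + frame_size])
        for i in range(0, len(payload), frame_size)
    ]


def multiplex(responses, frame_size=4):
    """Interleave the frames of several responses round-robin."""
    per_stream = [
        split_into_frames(stream_id, body, frame_size)
        for stream_id, body in responses.items()
    ]
    wire = []
    for group in zip_longest(*per_stream):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def demultiplex(wire):
    """Receiver side: reassemble each body using the stream identifier."""
    bodies = defaultdict(bytes)
    for stream_id, chunk in wire:
        bodies[stream_id] += chunk
    return dict(bodies)


# Hypothetical responses keyed by stream identifier.
responses = {1: b"<html>...</html>", 3: b"body{}", 5: b"alert(1);"}
wire = multiplex(responses)
print(wire[:3])  # [(1, b'<htm'), (3, b'body'), (5, b'aler')]
assert demultiplex(wire) == responses
```

No response has to wait for another one to finish: a slow or large response only occupies some of the frames on the connection.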

Considering a network latency between New York and Sydney of about 80 ms, using HTTP/2 reduces the network time by 3 round trips (2 TCP handshakes) when compared to HTTP/1.0. This corresponds to a time saving of 240 ms!
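The arithmetic behind this estimate can be reproduced with a one-line helper. The function name `handshake_saving_ms` is hypothetical, and the extra latency values in the loop are arbitrary examples rather than measurements.

```python
def handshake_saving_ms(round_trip_ms, round_trips_saved):
    """Network time saved by avoiding extra connection setups."""
    return round_trip_ms * round_trips_saved


# Figures from the text: 80 ms of latency, 3 round trips saved.
print(handshake_saving_ms(80, 3))  # 240

# The saving grows linearly with latency (example values only).
for latency_ms in (10, 80, 200):
    print(latency_ms, "ms latency ->", handshake_saving_ms(latency_ms, 3), "ms saved")
```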

Server push

Loading resources in the browser is hard!

As we’ve seen previously, performance is tightly coupled to network latency. Furthermore, the order in which resources are loaded and executed is extremely important. For example, loading a JavaScript file blocks the rendering of a webpage (the HTML parsing process is stopped until the JavaScript is loaded). CSS (Cascading Style Sheets) resources also block rendering.

So two of the major questions in web applications performance optimization are: “How can we load resources more efficiently, and what are the most important resources to load first?”

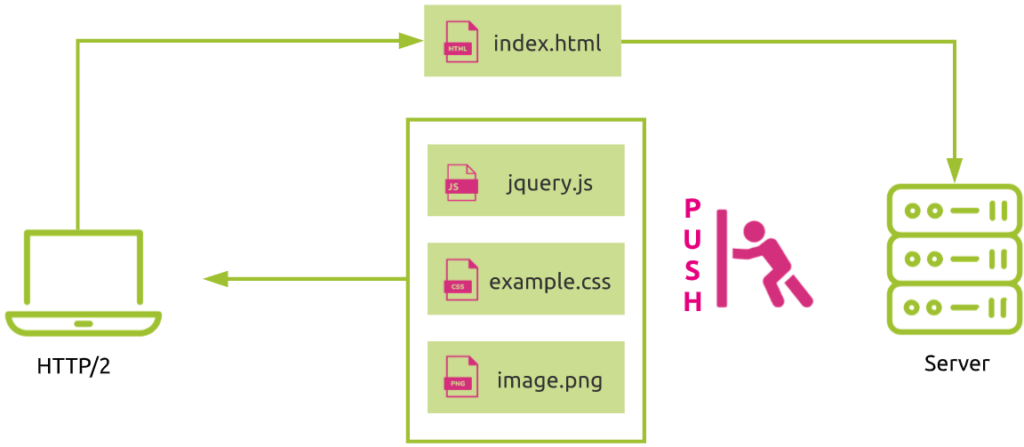

In HTTP/2, a server can anticipate that the browser will need additional resources later and proactively send them without waiting for further requests from the browser. This is called “Server Push”.

In the following figure, requesting the index HTML page automatically triggers the sending of required JavaScript, stylesheet and image by the server, without any additional request from the browser.

Using server push can greatly improve web performance.

Is HTTP/2 the ultimate solution?

From the previous paragraphs, you could conclude that using HTTP/2 will be the ideal solution to boost web performance in all types of scenarios. Is it really the case?

Let’s consider two cases that illustrate situations where HTTP/2 may not be the right protocol to use.

Using multiplexing in network congestion conditions

Let’s assume that you use the multiplexing technique while experiencing network congestion.

Since multiple web transactions are transmitted over the same TCP connection, they are all equally affected by this degradation (packet loss and retransmission), even if the lost data only concerns a single request!
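The following sketch illustrates this effect with a hypothetical packet trace. It compares a single in-order connection, as with TCP, to independently delivered streams, which is the approach taken by transports such as QUIC. The function name, stream labels and timings are made up for illustration.

```python
from itertools import accumulate


def delivery_times(packets, shared_connection=True):
    """Return {stream: time its last packet reaches the application}.

    packets: list of (stream, arrival_ms) in sending order.
    On a shared in-order connection (TCP), a packet is handed to the
    application only once all earlier packets have arrived. With
    independent streams, only earlier packets of the same stream matter.
    """
    done = {}
    if shared_connection:
        in_order = accumulate((arrival for _, arrival in packets), max)
        for (stream, _), delivered in zip(packets, in_order):
            done[stream] = delivered
    else:
        for stream, arrival in packets:
            done[stream] = max(done.get(stream, 0), arrival)
    return done


# Hypothetical trace: the first packet of stream A is lost and
# retransmitted, so it arrives at 300 ms instead of about 10 ms.
packets = [("A", 300), ("B", 20), ("C", 30), ("A", 40)]
print(delivery_times(packets, shared_connection=True))
# {'A': 300, 'B': 300, 'C': 300}
print(delivery_times(packets, shared_connection=False))
# {'A': 300, 'B': 20, 'C': 30}
```

On the shared connection, streams B and C are held back until 300 ms although none of their own packets were lost.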

So here you see how great techniques that should improve performance can in some cases make the situation worse.

This is where TCP reaches its limits, and why newer protocols (QUIC and HTTP/3) use UDP as the transport layer. That is another story, which we will address in future articles.

Using server push in combination with caching techniques

With server push, the server proactively sends resources to the client’s browser.

Nevertheless, if the browser already has these resources in its cache, is it a good idea to send them anyway? Of course not! It would unnecessarily consume network and system resources.

The main problem here is that the server does not have any knowledge of the client cache state. Among other workarounds, one solution to this problem is called “Cache Digest”. A digest is sent by the browser to inform the server about all resources that it has available in its cache, so that the server knows it does not need to send them.
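The principle of a cache digest can be sketched as below. This is only an illustration of the idea: it uses a plain set of truncated SHA-256 hashes rather than any standardized wire format, and the function names and URLs are hypothetical.

```python
import hashlib


def url_hash(url):
    """Short fingerprint of a URL (truncated SHA-256, for illustration)."""
    return hashlib.sha256(url.encode("utf-8")).hexdigest()[:8]


def build_digest(cached_urls):
    """Browser side: summarize the cache as a set of fingerprints."""
    return {url_hash(url) for url in cached_urls}


def resources_to_push(candidates, client_digest):
    """Server side: skip push candidates the browser reports as cached."""
    return [url for url in candidates if url_hash(url) not in client_digest]


cached = ["/css/site.css"]
candidates = ["/css/site.css", "/js/app.js", "/img/logo.png"]
print(resources_to_push(candidates, build_digest(cached)))
# ['/js/app.js', '/img/logo.png']
```

The browser sends fingerprints instead of full URLs to keep the digest small; the server then pushes only what the browser does not already have.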

Unfortunately, this kind of technique still suffers from inconsistencies between browsers and a lack of server support.

Wrap-up

Web technologies continuously evolve to offer the best user experience possible.

HTTP/2 brings major improvements compared to the previous HTTP/1.X standard. New protocols like HTTP/3 (previously called HTTP-over-QUIC, where QUIC originally stood for Quick UDP Internet Connections) will definitely play an important role in web development in the future.

Nevertheless, as we’ve seen in this article, the devil is often in the details. Great capabilities can sometimes lead to unexpected results. The efficiency of the techniques described depends on external factors like network conditions and browser/server support.

This is why it is so important to be able to monitor:

- not only web performance itself, in terms of protocol and transaction visibility,

- but also how clients connect to web resources (locations, network performance, …).

This is what Kadiska does when delivering end-to-end experience monitoring: find out more!