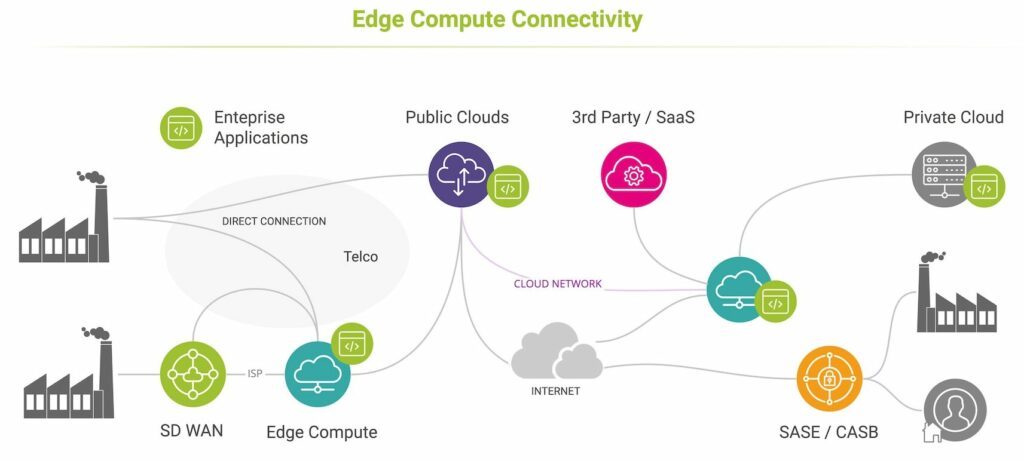

With a mix of edge cloud and connectivity providers, it can be difficult to pin down performance guarantees or to quickly resolve latency or application issues. Imagine purchasing multi-provider edge compute services from a managed service provider who offers connectivity via multiple wholesale and regional telecom service providers over a managed SD-WAN!

This reality makes it difficult to purchase an end-to-end assured, SLA-backed edge compute service – not only because many parties are involved, but also because it is never easy to determine where problems originate.

This combination of multiple service providers, hosting locations and domains makes detecting, isolating and resolving performance degradations an essential part of a successful edge compute strategy. IT operations and performance teams need to consider what can impact application performance to ensure they select the best providers, validate deployments and resolve issues quickly.

This blog post explains the many ways latency can be impacted when accessing cloud resources.

What Impacts Edge Compute Performance?

Edge computing is designed for high performance, but by definition small, regional data centers and ‘pico clouds’ like those provided by the AWS Snow family have limited resources, resiliency and scalability. It’s critical that workloads are managed and monitored. You can learn all about the origins of edge compute, how it’s deployed and the top applications for edge computing in this related post.

Here are the top six reasons to monitor edge computing performance:

- Ensure workloads don’t overwhelm limited edge compute capacity. This can be easily detected by server response and transaction times, transfer rates and application errors.

- Detect edge hosting location changes. If dynamic orchestration is employed, it’s also important to know if workloads have changed location or moved to public clouds when local edge compute capacity is saturated.

- Get outage alerts. Edge compute performance can be impacted by outages in either the edge cloud or connectivity domains, both of which are highly dynamic. Local access networks are less resilient and less diverse than the high capacity networks enterprises use to access large-scale cloud data centers.

- Identify congestion caused by large transfers. High bandwidth utilization between enterprise sites and edge cloud locations can result in packet loss and latency as traffic is retransmitted. This is especially common in video-related analytics applications (e.g. machine vision, face and object detection) which combine large volumes of data with low-latency performance requirements.

- Detect abnormal latency. Increased latency can originate in compute or application layers, network configuration, path changes or connectivity setup delays introduced by DNS and TLS. Where SASE / CASB is used to manage cloud access, connectivity can be impacted as traffic is redirected through secure proxies before routing along the network path between them and edge compute nodes.

- Determine how third-party and remote services are impacting performance. Where edge applications use services hosted elsewhere they can be affected by transaction and processing delays introduced by connectivity to, and performance of, these remote resources. You can learn more about hybrid connectivity performance challenges in this blog article.
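As a rough illustration of the "detect abnormal latency" point above, the sketch below flags latency samples that deviate sharply from a recent baseline. It is a minimal example under stated assumptions, not a description of how any particular monitoring product works: the function name `find_latency_anomalies`, the baseline size and the threshold are all hypothetical, and the baseline uses the median and median absolute deviation (MAD) simply because they tolerate a few outliers.

```python
from statistics import median


def find_latency_anomalies(samples_ms, baseline_size=20, threshold=5.0):
    """Return indices of samples that sit far above the baseline latency.

    The first `baseline_size` samples form the baseline. A later sample is
    flagged when it exceeds the baseline median by more than `threshold`
    times the baseline's median absolute deviation (MAD).
    All names and default values here are illustrative assumptions.
    """
    baseline = samples_ms[:baseline_size]
    if len(baseline) < 3:
        raise ValueError("need at least 3 baseline samples")

    center = median(baseline)
    mad = median(abs(x - center) for x in baseline)
    # Guard against a zero spread when the baseline is perfectly flat.
    spread = max(mad, 0.5)

    return [
        i
        for i, x in enumerate(samples_ms)
        if i >= baseline_size and (x - center) / spread > threshold
    ]


# Ten steady samples around 11 ms, followed by two spikes.
rtts = [10, 11, 10, 12, 11, 10, 11, 10, 12, 11, 11, 45, 12, 60]
anomalies = find_latency_anomalies(rtts, baseline_size=10)
```

In practice the same check could be run separately on DNS, TLS and server response timings, so that a flagged sample also hints at the layer where the delay originates.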

How Do You Monitor Edge Compute Performance?

With so many variables that can impact performance, it’s critical to have end-to-end visibility into the full application delivery chain, from client to edge compute application.

Here are some edge compute performance monitoring essentials:

- Application and compute performance and responsiveness – transaction and server response delays and errors.

- Edge compute reachability and reliability per application and user location.

- Network routing, path length, latency and loss performance between all user locations and edge node locations.

- End-to-end underlay network performance per hop and per provider, tracked over time.

- Performance impact of SASE / CASB, DNS and TLS services on latency and local access.

- User experience monitoring for edge-hosted applications dependent on multiple remote hosts, CDNs or third-party services.

- Network performance between edge and public / private clouds.
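To make the reachability, latency and loss items above more concrete, here is a small sketch that rolls up probe results per user location and edge node. The `Probe` record, its field names and the `summarize_paths` function are hypothetical, chosen only for illustration; a real deployment would take its measurements from whatever probing or tracing tool is already in place.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Probe:
    """One measurement from a user location to an edge node (hypothetical schema)."""
    user_location: str
    edge_node: str
    rtt_ms: Optional[float]  # None means the probe received no response


def summarize_paths(probes):
    """Group probes by (user_location, edge_node) and compute loss and latency."""
    grouped = defaultdict(list)
    for p in probes:
        grouped[(p.user_location, p.edge_node)].append(p.rtt_ms)

    summary = {}
    for path, rtts in grouped.items():
        answered = sorted(r for r in rtts if r is not None)
        summary[path] = {
            "sent": len(rtts),
            "loss_pct": 100.0 * (len(rtts) - len(answered)) / len(rtts),
            "median_rtt_ms": median(answered) if answered else None,
            "max_rtt_ms": answered[-1] if answered else None,
        }
    return summary


probes = [
    Probe("paris", "edge-a", 10.0),
    Probe("paris", "edge-a", 12.0),
    Probe("paris", "edge-a", None),
    Probe("paris", "edge-a", 14.0),
    Probe("lyon", "edge-a", None),
    Probe("lyon", "edge-a", None),
]
report = summarize_paths(probes)
```

A path with 100% loss and no latency figures points to a reachability problem, while a path with low loss but a high maximum round-trip time is more likely to be suffering from congestion or a path change.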

Solutions need to be compatible with a highly dynamic, modern edge cloud infrastructure:

- Lightweight and cost efficient.

- Scalable from regional to global coverage.

- Responsive to changing edge locations, applications and workloads.

- Non-intrusive, agent/sidecar-free approach.

- Open APIs to interconnect with orchestration, SD-WAN and observability platforms.

See It In Action

Kadiska offers a self-driving digital experience monitoring solution that integrates application and network performance with guided troubleshooting and optimization insight – designed for modern SaaS, cloud and edge-enabled applications.

Take 20 minutes and try it in your network.