The role of networks in application performance in a cloudified environment

Cloudification has changed the way we look at infrastructure and applications: over 70% of enterprise apps are now SaaS applications (source). Most companies leverage multiple clouds and have migrated most of their workloads to public cloud platforms. What is the impact on the delivery of applications to users? What does it mean when it comes to the role of networks in the overall application performance?

The network in application delivery before the cloud

Before the shift to the cloud, private networks were at the heart of application delivery in an enterprise context, and the internet carried most of the traffic for publicly exposed applications. Things were simple.

The case of private applications

In the “good old days”, users typically accessed applications hosted in a datacenter from a corporate site connected to a private network via an MPLS connection or a point-to-point Layer 2 connection.

From a network performance standpoint, this was quite easy to manage; things were clear:

- Where the user was sitting in the network

- In which data center the application (mainly monolithic at that time) was hosted

- The network path the traffic was taking

- Which provider was responsible for the performance of that circuit

The case of internet-exposed applications

Applications exposed to the internet were harder to control, as user-to-application connectivity depended on a series of operators’ networks. The application owner had no way to influence most of these network providers.

This was offset by deploying architectures that structurally reduce the latency between users and applications:

- Hosting in multiple regions to reduce the “distance” between users and application workloads

- Using Content Delivery Networks to get even closer to users

- Fine-tuning the BGP policy (or letting your hosting provider do it) to optimize the network path from users to applications
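Distance matters because light in optical fiber travels at roughly two thirds of its speed in a vacuum, about 200 km per millisecond. The sketch below estimates the best-case round-trip time between a user and a candidate hosting region from their great-circle distance. The fiber-speed constant and the city coordinates are illustrative assumptions, and real network paths are longer than the great-circle route, so measured RTTs will always be higher than this floor.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond (about 2/3 of c).
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to guard against floating-point values slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def min_rtt_ms(distance_km: float) -> float:
    """Physical lower bound on RTT: there and back at fiber speed, no detours or queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Illustrative coordinates: a user in Paris and two candidate hosting regions.
    user = (48.86, 2.35)
    regions = {"frankfurt": (50.11, 8.68), "virginia": (38.95, -77.45)}
    for name, (lat, lon) in regions.items():
        d = great_circle_km(*user, lat, lon)
        print(f"{name}: {d:.0f} km, RTT >= {min_rtt_ms(d):.1f} ms")
```

No BGP tuning or CDN can beat this floor, which is why bringing workloads and content physically closer to users was the main lever.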

The network in application delivery and performance in a cloudified and hybrid world

Focusing on enterprise applications, the use of cloud technologies has changed the very nature of networks and their impact on application delivery.

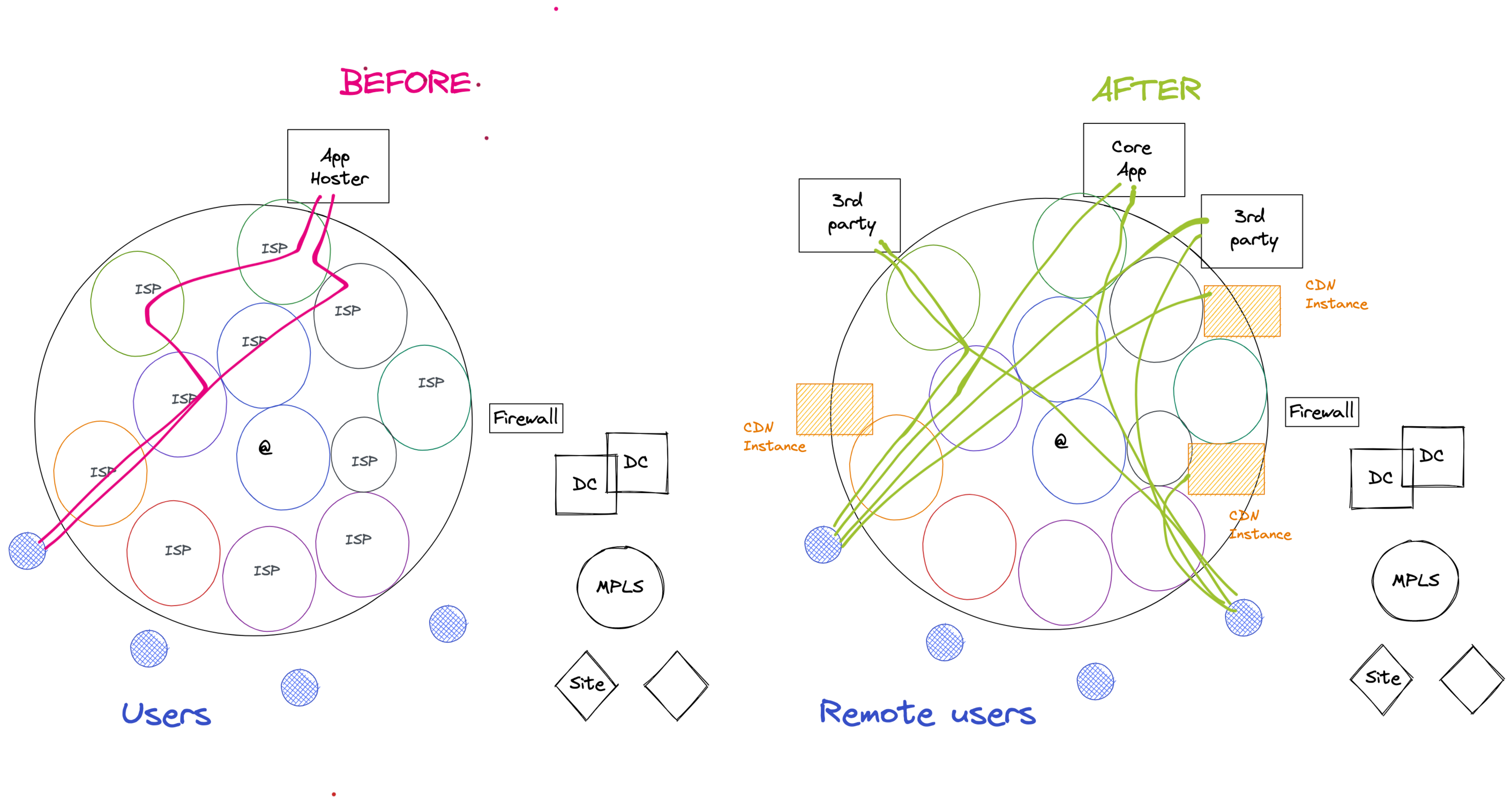

The network in application delivery: user to application path

The cloudification process has deeply impacted the infrastructure that application traffic traverses from a user to an app. The user no longer simply goes across a private network (LAN, then WAN, to reach a datacenter controlled by the same entity).

There is now a variety of options:

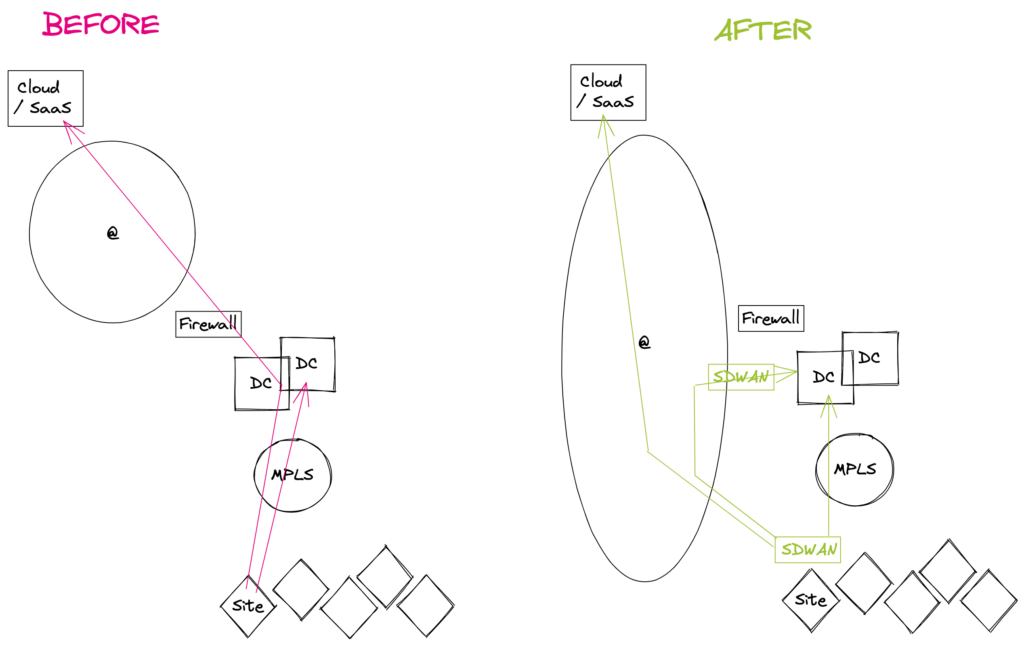


SD-WAN to data centers and clouds

A user located in a remote site can be connected to applications through an SD-WAN device, which routes the traffic over a variety of network services called underlay circuits:

- MPLS connection to a datacenter (and then possibly through an internet / cloud gateway to reach SaaS and public cloud resources)

- VPN / overlay tunnel over a public internet connection to a datacenter (and then possibly through an internet / cloud gateway to reach SaaS and public cloud resources)

- Local internet breakout to connect directly to SaaS applications

This has two consequences:

- The latency observed at the overlay level will vary: it depends heavily on which underlay carries the traffic, on path changes at the underlay level, and on the overall performance of the underlay network (check this article for more on this topic).

- Beyond the performance of the SD-WAN overlay, the additional network segments on the user’s end (WiFi or LAN) and on the datacenter / cloud end (cloud gateway, datacenter gateway, cloud connectivity or internet access) also affect end-to-end application performance.
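To make these two consequences concrete, the sketch below models a simplified SD-WAN branch: the overlay latency is whatever the chosen underlay delivers, and the end-to-end figure adds the user-side and datacenter/cloud-side segments. The underlay names, the measurements, and the "lowest-RTT underlay that meets the SLA" policy are hypothetical; real SD-WAN products implement their own path-selection logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Underlay:
    name: str
    rtt_ms: float    # measured round-trip time over this underlay
    loss_pct: float  # measured packet loss, in percent


def pick_underlay(underlays: List[Underlay], max_rtt_ms: float, max_loss_pct: float) -> Underlay:
    """Hypothetical SLA policy: the lowest-RTT underlay that meets the SLA,
    falling back to the lowest-RTT underlay overall if none does."""
    within_sla = [u for u in underlays if u.rtt_ms <= max_rtt_ms and u.loss_pct <= max_loss_pct]
    return min(within_sla or underlays, key=lambda u: u.rtt_ms)


def end_to_end_ms(lan_ms: float, overlay: Underlay, gateway_ms: float, cloud_ms: float) -> float:
    """End-to-end RTT is the sum of every segment, not only the SD-WAN overlay."""
    return lan_ms + overlay.rtt_ms + gateway_ms + cloud_ms


if __name__ == "__main__":
    # Made-up measurements for one branch site.
    links = [Underlay("mpls", 35.0, 0.0), Underlay("inet-a", 22.0, 1.5), Underlay("inet-b", 28.0, 0.1)]
    chosen = pick_underlay(links, max_rtt_ms=40.0, max_loss_pct=0.5)
    print(chosen.name, end_to_end_ms(lan_ms=4.0, overlay=chosen, gateway_ms=2.0, cloud_ms=10.0))
```

With these numbers the policy selects inet-b and the user sees 44 ms end to end, of which the overlay accounts for only 28 ms. If inet-b's path changes and its RTT jumps, the same policy moves traffic to MPLS, and the overlay latency changes with it.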


Public internet in Cloud/SaaS access

Although SaaS and cloud resources are critical for most enterprises, the vast majority of enterprises still reach them over internet connections:

- Either by sending their traffic over the public internet

- Or by setting up virtual private networks over internet connectivity

This means that the path taken to reach a cloud or SaaS platform is subject to path changes across multiple operators (unless you own your AS – see this article on BGP and performance – and peer directly with SaaS / cloud providers).

These path changes, as well as congestion outside your network, will affect end-to-end application performance.
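Because these changes happen outside your network, they usually show up first as a step change in latency. A minimal way to spot them, assuming you already collect periodic RTT samples toward a SaaS or cloud endpoint, is to compare the median of a recent window with a baseline median. The window sizes and the 20% threshold below are arbitrary assumptions, and a traceroute or a BGP view would still be needed to confirm that the path actually changed.

```python
from statistics import median
from typing import List, Tuple


def latency_shift(samples_ms: List[float], baseline_n: int = 30, recent_n: int = 10,
                  threshold: float = 0.2) -> Tuple[bool, float, float]:
    """Return (shifted, baseline_median, recent_median).

    Flags a shift when the median of the last `recent_n` samples differs from the
    median of the preceding `baseline_n` samples by more than `threshold` (relative).
    Medians are used so that a single outlier does not trigger an alert.
    """
    if len(samples_ms) < baseline_n + recent_n:
        raise ValueError("not enough samples")
    recent = samples_ms[-recent_n:]
    baseline = samples_ms[-(recent_n + baseline_n):-recent_n]
    base_med, recent_med = median(baseline), median(recent)
    shifted = abs(recent_med - base_med) > threshold * base_med
    return shifted, base_med, recent_med


if __name__ == "__main__":
    # Made-up samples: a stable 20 ms path that moves to a 35 ms path.
    print(latency_shift([20.0] * 30 + [35.0] * 10))
```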


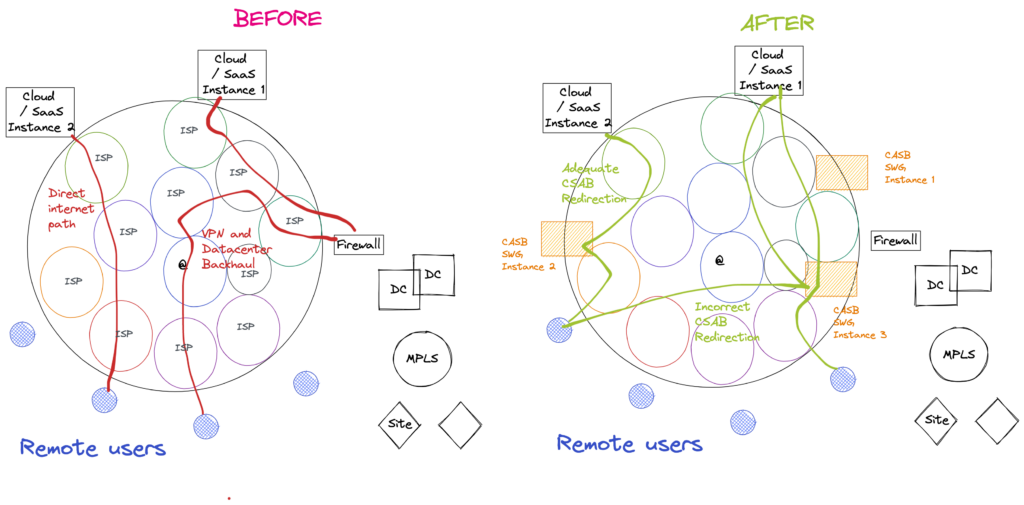

Cloud services between users and clouds

Secure Web Gateways and Cloud Access Security Brokers now represent a security layer between users and SaaS / Cloud applications.

They also change the network path from users to apps, which now depends on:

- Geolocation-based redirection to CASB / SWG nodes

- The BGP path to these nodes, and then from the CASB platform to the SaaS / cloud app

It is now critical to evaluate the added latency introduced by these “cloud proxies”.
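One way to reason about this added latency is as a detour: traffic that would have gone user → SaaS now goes user → proxy node → SaaS. The sketch below computes the extra round-trip time from three RTT measurements. The node names and figures are hypothetical, and the model treats the proxy's own processing time (TLS inspection, policy evaluation) as an optional constant, which in practice varies.

```python
from typing import Dict, Tuple


def proxy_overhead_ms(user_to_app_ms: float, user_to_proxy_ms: float,
                      proxy_to_app_ms: float, processing_ms: float = 0.0) -> float:
    """Extra RTT from going through a cloud proxy instead of reaching the app directly."""
    via_proxy = user_to_proxy_ms + proxy_to_app_ms + processing_ms
    return via_proxy - user_to_app_ms


def best_proxy_node(user_to_app_ms: float, nodes: Dict[str, Tuple[float, float]]) -> str:
    """nodes maps a node name to (user_to_proxy_ms, proxy_to_app_ms); returns the cheapest detour."""
    return min(nodes, key=lambda n: proxy_overhead_ms(user_to_app_ms, *nodes[n]))


if __name__ == "__main__":
    direct = 30.0  # made-up direct user -> SaaS RTT
    nodes = {"paris": (5.0, 28.0), "amsterdam": (12.0, 20.0), "new-york": (80.0, 10.0)}
    for name, (to_proxy, to_app) in nodes.items():
        print(name, proxy_overhead_ms(direct, to_proxy, to_app), "ms extra")
    print("best node:", best_proxy_node(direct, nodes))
```

With these made-up figures, a well-chosen node adds 2 to 3 ms, while a user sent to a distant node by a geolocation mistake pays 60 ms extra on every round trip.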

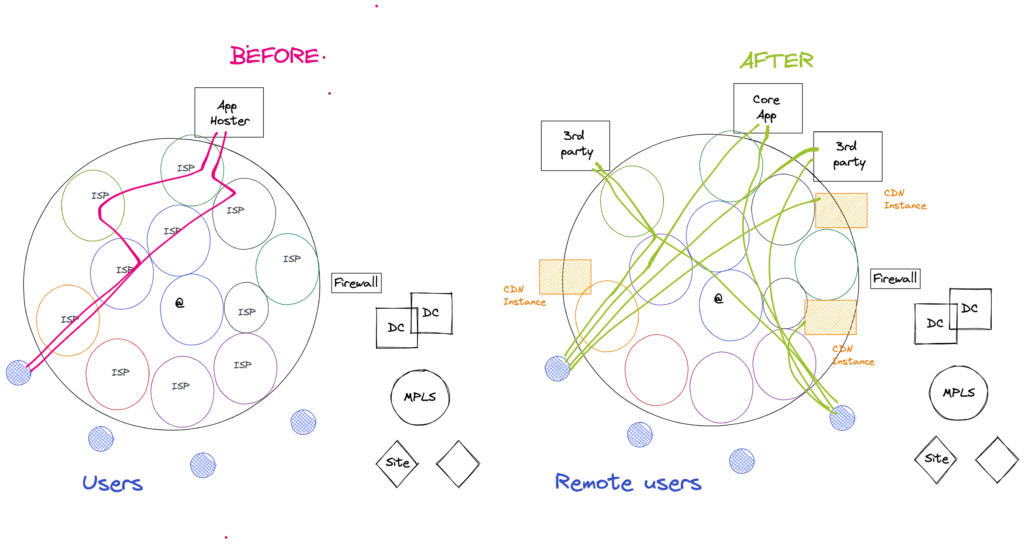

The impact of distribution of applications on delivery to users

In traditional application delivery, users connected, if not to a single front-end server, at least to a set of front-end services located in the same environment. Cloudification has added complexity by introducing the following concepts:

- Cloud availability

Clouds make it far easier to distribute front-end servers across multiple regions (to reduce the latency between users and front-end servers). Although cloud coverage is still uneven from one region to another, there are many options to bring the edge of your app far closer to users than before, by leveraging availability zones in multiple regions or specialized services such as AWS Global Accelerator.

- CDN

Content Delivery Networks are widely used to distribute static content (images, CSS, scripts, etc.) closer to users.

- 3rd party services

Many applications leverage out-of-the-box services provided by third parties to reduce the development load and accelerate the release of new applications. This further distributes the systems that users call when accessing an application.

The bottom line is that, for a single application, this new context multiplies the number of network connections between users and front ends, and with it the opportunities for geolocation or redirection errors and for abnormal network conditions.
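This multiplication effect can be quantified with a simple model. If each distinct host an application depends on (front end, CDN, third-party APIs) independently has a small probability p of suffering an abnormal network condition during a session, the probability that at least one of n hosts is affected is 1 − (1 − p)^n. Each new host also costs connection-setup round trips before the first request. The independence assumption, the 1% figure, and the setup model below are simplifications for illustration.

```python
def p_any_degraded(p_per_host: float, n_hosts: int) -> float:
    """Probability that at least one of n hosts is degraded,
    assuming each is independently degraded with probability p."""
    return 1 - (1 - p_per_host) ** n_hosts


def setup_ms(rtt_ms: float, dns_ms: float = 0.0, handshake_rtts: int = 2) -> float:
    """Delay before the first request can be sent to a new host.

    handshake_rtts=2 assumes one round trip for the TCP handshake and one for a
    TLS 1.3 handshake; a full TLS 1.2 handshake needs one more round trip.
    """
    return dns_ms + handshake_rtts * rtt_ms


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(f"{n:>2} hosts: P(at least one degraded) = {p_any_degraded(0.01, n):.1%}")
    print(f"new host at 40 ms RTT, 20 ms DNS: {setup_ms(40.0, dns_ms=20.0):.0f} ms before the first request")
```

With p = 1% per host, ten hosts already give roughly a 9.6% chance that at least one of them degrades the user's session.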

Network inside the application platform

Besides the user-to-front-end segment, cloudification introduces new sources of network latency where there was little or none in traditional application architectures.

In a traditional architecture, application resources were in most cases concentrated in a single datacenter, which limited the latency between systems to that of a local network. With cloudification, systems can be distributed across multiple locations, cloud regions or cloud providers.

Datacenter to cloud

It is common for companies in the middle of their cloud migration to have some application services still hosted in their legacy data centers while other workloads run in the cloud.

Funnily enough, while some customers rely on VPNs over public connectivity and others decide to invest in “direct connectivity” to the cloud, few actually measure the latency between their workload locations and evaluate its impact on the user experience… until they run into a production issue (user complaints or slow data transfers during a migration, for example).
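Slow transfers during a migration are often a direct consequence of latency: a single TCP flow cannot have more than one window of unacknowledged data in flight per round trip, so its throughput is capped at roughly window / RTT, regardless of link capacity. The sketch below applies that upper bound. The window size and RTT values are illustrative assumptions, and real throughput also depends on packet loss, congestion control, and whether TCP window scaling is in use.

```python
def max_tcp_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for one TCP flow: one window of data per round trip."""
    return window_bytes * 8 / rtt_s


def transfer_hours(data_bytes: float, throughput_bps: float) -> float:
    """Time to move data_bytes at a sustained throughput, in hours."""
    return data_bytes * 8 / throughput_bps / 3600


if __name__ == "__main__":
    window = 65_535  # largest TCP window without window scaling
    for rtt_ms in (1, 10, 40):
        bps = max_tcp_throughput_bps(window, rtt_ms / 1000)
        print(f"RTT {rtt_ms:>3} ms -> max {bps / 1e6:.1f} Mbit/s per flow, "
              f"1 TB in {transfer_hours(1e12, bps):.1f} h")
```

Moving the same flow from a 1 ms datacenter LAN to a 40 ms datacenter-to-cloud path divides its maximum throughput by forty, which is why measuring that RTT before a migration pays off.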

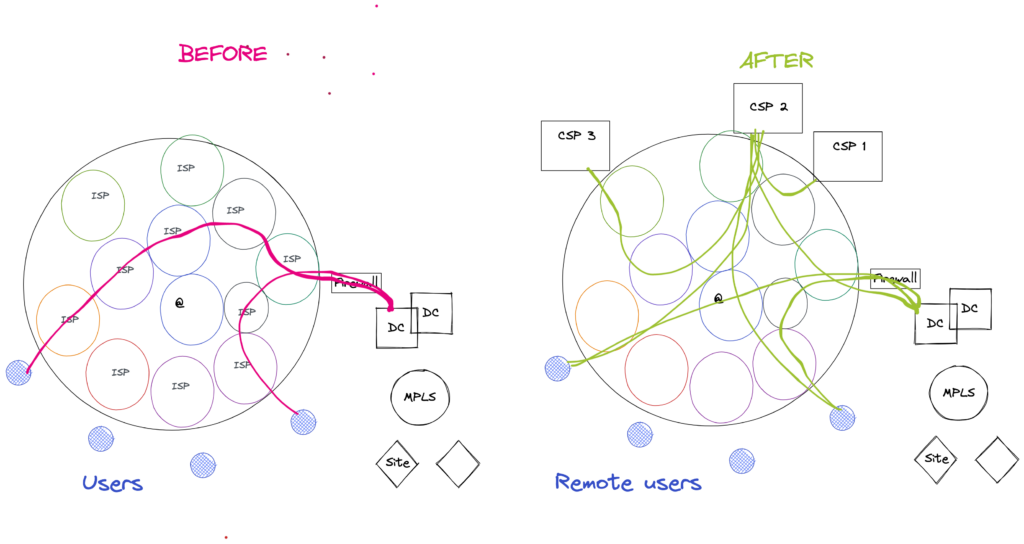

Cloud to cloud

The vast majority of enterprises run multi-cloud environments, for plenty of reasons: economics, decisions made by different organizational entities, or the affinity of certain applications for a given cloud (e.g. applications based on Microsoft technologies such as Active Directory tend to be moved to Azure, and data hosted in Oracle database systems tends to be migrated to Oracle’s cloud to lessen the migration effort).

Relying on the global footprint of a single cloud provider can also add network latency between application workloads. Here is why.

While organizations leverage multiple regions of a cloud infrastructure to limit the distance to users, most of them find it much more difficult to distribute their internal systems, such as API servers, databases and other back-end services.

This introduces significant latency between front-end and back-end services, which in most cases is a new situation, and can significantly degrade the responsiveness of front-end servers (which may have to wait longer for the back end to respond).
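The impact grows with how "chatty" the front end is. When a front end makes its back-end calls one after another, the network cost of a request is the sum of the round trips; when it can issue independent calls concurrently, the cost is bounded by the slowest one. The sketch compares both for a hypothetical request that needs 20 back-end calls, first with the back end in the same site (an assumed 0.5 ms RTT) and then in another region (an assumed 30 ms RTT).

```python
from typing import List


def sequential_ms(rtts_ms: List[float]) -> float:
    """Calls issued one after another: round trips add up."""
    return sum(rtts_ms)


def parallel_ms(rtts_ms: List[float]) -> float:
    """Independent calls issued concurrently: the slowest one dominates."""
    return max(rtts_ms, default=0.0)


if __name__ == "__main__":
    calls = 20
    for label, rtt in (("same site", 0.5), ("cross-region", 30.0)):
        rtts = [rtt] * calls
        print(f"{label}: sequential {sequential_ms(rtts):.0f} ms, "
              f"parallel {parallel_ms(rtts):.1f} ms")
```

The same code that spends 10 ms on the network when front end and back end share a site spends 600 ms once they are a region apart, unless the calls can be parallelized or batched.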

In addition, when you consider that these back-end services can be provided either as PaaS instances (run by the cloud provider, outside the organization’s control) or as microservices, you will understand that this added latency is hard to evaluate and anticipate, as it can vary at any point in time.
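Because this latency varies over time, averages tend to hide the problem; percentiles over a series of measurements describe it better, especially since a request that waits on several back ends is held up by the slowest response. A minimal sketch using the standard library's `statistics.quantiles` (Python 3.8+), applied to made-up sample values:

```python
from statistics import mean, quantiles
from typing import Dict, List


def latency_profile(samples_ms: List[float]) -> Dict[str, float]:
    """Summarize latency samples with the mean and a few percentiles."""
    cuts = quantiles(samples_ms, n=100)  # 99 cut points: cuts[k - 1] is the k-th percentile
    return {"mean": mean(samples_ms), "p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


if __name__ == "__main__":
    # Made-up samples: mostly 12 ms, with occasional slow responses from a PaaS back end.
    samples = [12.0] * 90 + [15.0] * 6 + [80.0] * 3 + [250.0]
    for name, value in latency_profile(samples).items():
        print(f"{name}: {value:.1f} ms")
```

With these samples the mean is 16.6 ms and the median 12 ms, while the 99th percentile is about 248 ms, which is closer to what the unluckiest requests experience.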

Finally, mostly in large organizations, systems migrated to the cloud can end up in different environments, even with different cloud providers, or can be deployed in different ways (SaaS, PaaS, IaaS). This means that the latency between workloads will depend largely on each cloud service provider’s infrastructure and on how the providers are interconnected.
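To anticipate this, one option is to record where each service runs and the measured round-trip time between those locations, then add up the round trips a single user request triggers. In the sketch below, the location names, RTT values, service names and call counts are all hypothetical placeholders to be replaced with your own measurements, and calls are assumed to be sequential.

```python
from typing import Dict, List, Tuple

# Hypothetical inter-location round-trip times in ms, assumed symmetric.
RTT_MS: Dict[Tuple[str, str], float] = {
    ("aws-eu-west", "azure-westeurope"): 8.0,
    ("aws-eu-west", "oci-frankfurt"): 15.0,
    ("azure-westeurope", "oci-frankfurt"): 12.0,
}
SAME_LOCATION_MS = 0.5  # assumed RTT between workloads in the same location


def rtt(a: str, b: str) -> float:
    """Look up the RTT between two locations; raises KeyError if the pair was never measured."""
    if a == b:
        return SAME_LOCATION_MS
    if (a, b) in RTT_MS:
        return RTT_MS[(a, b)]
    return RTT_MS[(b, a)]


def request_network_ms(placement: Dict[str, str], calls: List[Tuple[str, str, int]]) -> float:
    """Network time of one user request, assuming the calls are made sequentially.

    placement maps service -> location; calls lists (caller, callee, calls_per_request).
    """
    return sum(n * rtt(placement[src], placement[dst]) for src, dst, n in calls)


if __name__ == "__main__":
    calls = [("frontend", "directory", 1), ("frontend", "orders-db", 10)]
    split = {"frontend": "aws-eu-west", "directory": "azure-westeurope", "orders-db": "oci-frankfurt"}
    near_db = dict(split, frontend="oci-frankfurt")
    print("front end on AWS:", request_network_ms(split, calls), "ms")
    print("front end next to the database:", request_network_ms(near_db, calls), "ms")
```

With these made-up numbers, moving the front end next to the database it calls most often cuts the network time per request from 158 ms to 17 ms, the kind of trade-off that workload placement across providers implies.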

This has become a common performance pitfall in multi-cloud environments.

In conclusion, the move to the cloud has reinforced the impact of networks on user experience, while sometimes making that impact less manageable and predictable than before.