In our previous article on “TLS’ impact on performance”, we specifically addressed how the TLS handshake process impacts the performance of digital services. We also explained some techniques you can use to minimize TLS’ performance impact, like using “False Start”, “Perfect Forward Secrecy” or the latest TLS 1.3 version.

In this article, we continue our TLS journey by looking at more subtle drivers of TLS performance. In particular, we address how certificates impact performance.

What’s the performance impact of TLS authentication using certificates?

In HTTPS communications, certificates allow peers to prove their identity. In the browser, this authentication mechanism allows the client to verify that the server is who it claims to be, which helps prevent many client-centric web security threats. The server can also optionally verify the identity of the client (when using a proxy, for example). How this authentication is processed has a performance impact.

How does server authentication work?

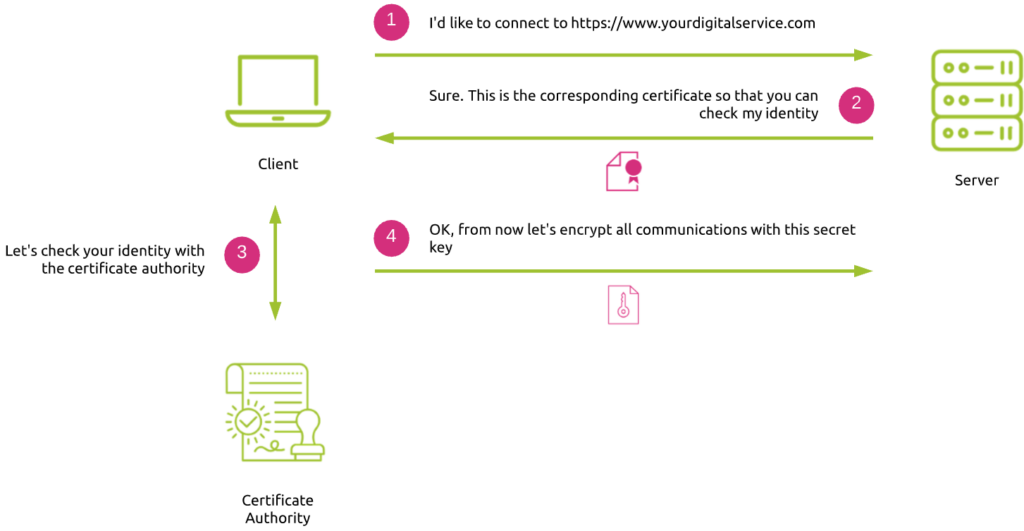

This is how a TLS handshake typically works:

- The client (browser) sends a request to the server, asking for access to a digital service through HTTPS. If the same server (so the same IP address) hosts different services with separate certificates, the client can specify the requested service through a TLS extension called SNI (Server Name Indication).

- The server acknowledges the request and delivers the corresponding service certificate to prove its identity.

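- The client checks the server’s identity by verifying that its certificate has been signed by a CA (Certificate Authority) it trusts, which may also involve contacting the CA to check that the certificate has not been revoked.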

- Once the client has validated the certificate, it sends a secret key to the server, which both peers then use to encrypt all subsequent traffic. Of course, the client does not send this secret key in clear text. In the classic RSA key exchange, it encrypts it with the server’s public key contained in the certificate, so that only the server can decrypt it, using its private key (the principle of public-key cryptography).

Validating the server’s identity requires these additional steps, which explains why using certificates impacts performance.

The “chain of trust” – example of kadiska.com

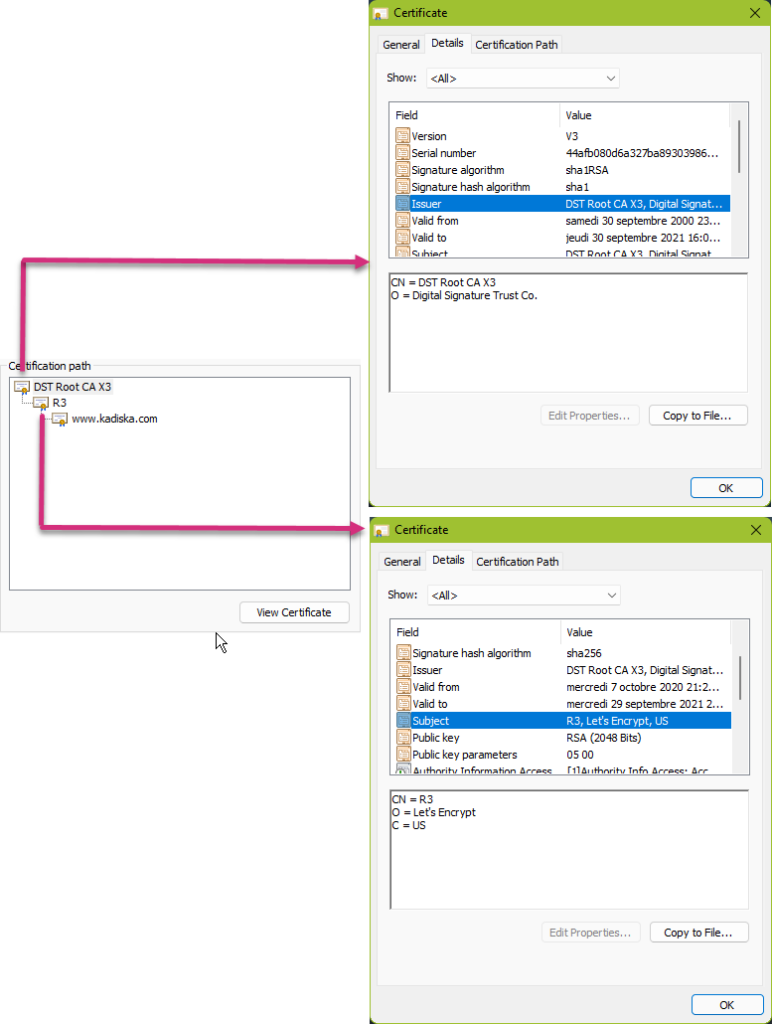

When the browser checks the server’s certificate authenticity, it does this by following what’s called the “chain of trust”. To explain this, let’s take the example of our Kadiska website.

When you browse to https://kadiska.com, our server provides your browser with its certificate:

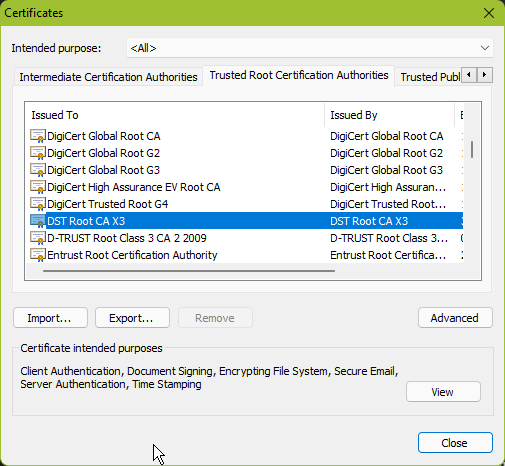

As you can see, our web server certificate has been signed by Let’s Encrypt, whose own certificate has in turn been signed by Digital Signature Trust Co. Every browser ships with a default list of trusted CAs, and in our case, Digital Signature Trust Co is already on that list.

The chain of trust works as follows: if a server’s certificate has been signed by an intermediate authority A, and A’s certificate has in turn been signed by a trusted CA, then you can trust A, and therefore the server’s certificate (the friend of my friend is also my friend, right?).
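To make the chain-of-trust walk more concrete, here is a minimal Python sketch. It models each certificate as a hypothetical `Cert` record holding only a subject and an issuer, and walks from the server certificate up to a trusted root. It deliberately leaves out what a real browser also checks at each step (signatures, validity dates, name constraints, revocation), so treat it as an illustration of the principle rather than a certificate validator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str  # who the certificate identifies
    issuer: str   # who signed it


def verify_chain(leaf, intermediates, trusted_roots):
    """Walk from the leaf certificate up to a trusted root.

    Returns the list of subjects traversed, ending with the root.
    Raises ValueError if a link in the chain is missing.
    NOTE: signature verification is NOT modelled in this sketch.
    """
    by_subject = {c.subject: c for c in intermediates}
    path = [leaf.subject]
    current = leaf
    while current.issuer not in trusted_roots:
        issuer = by_subject.get(current.issuer)
        if issuer is None:
            # A real browser would have to fetch this missing intermediate.
            raise ValueError(f"missing intermediate certificate: {current.issuer}")
        if issuer.subject in path:
            raise ValueError("loop in certificate chain")
        path.append(issuer.subject)
        current = issuer
    path.append(current.issuer)  # the trusted root that anchors the chain
    return path


# Usage, mirroring the kadiska.com example (simplified names)
roots = {"Digital Signature Trust Co"}
leaf = Cert("kadiska.com", "Let's Encrypt")
intermediate = Cert("Let's Encrypt", "Digital Signature Trust Co")
print(verify_chain(leaf, [intermediate], roots))
```

Calling `verify_chain(leaf, [], roots)` instead raises a `ValueError`, because the Let’s Encrypt link is missing: this is the situation where a browser has to go and fetch a missing intermediate certificate.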

Everything looks great and easy. Unfortunately, one important question remains: “How can you be sure an authentic certificate is still valid today?” Perhaps the server’s private key has been compromised and the CA has revoked the corresponding certificate to prevent any security threat. Your browser’s built-in list of trusted CAs will not magically reflect that!

So, to understand the performance impact of checking a server certificate’s validity, let’s dive a bit deeper into the certificate revocation check process and the ways to optimize it.

How to check whether a certificate has been revoked?

There are different ways to check if a certificate has been revoked.

Certificate Revocation List (CRL)

As its name suggests, the CRL is a list of revoked certificates. It is defined in RFC 5280. Each certificate authority maintains and periodically publishes a list of revoked certificate serial numbers. Anyone attempting to verify a certificate can then download the revocation list, cache it, and check for the presence of a particular serial number within it. If the serial number is present, the certificate has been revoked.
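A CRL check can be sketched as follows, assuming a hypothetical `CachedCRL` type and a `fetch_crl` callable standing in for the actual download. Real CRLs are signed, DER-encoded structures defined by RFC 5280; here only the two fields that matter for the lookup are kept: the revoked serial numbers and the `nextUpdate` time by which the CA will have issued a newer list. The serial numbers and dates below are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class CachedCRL:
    revoked_serials: frozenset  # serial numbers listed as revoked
    next_update: datetime       # when the CA will have published a newer CRL


def check_revocation(serial, cache, now, fetch_crl):
    """Return (is_revoked, crl_used).

    If the cached CRL is past its nextUpdate time, the client must first
    download a new one. fetch_crl is a hypothetical stand-in for that
    blocking download, which is where the handshake latency comes from.
    """
    if now >= cache.next_update:
        cache = fetch_crl()  # blocking network fetch
    return serial in cache.revoked_serials, cache


# Usage with made-up serial numbers and dates
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
fresh = CachedCRL(frozenset({0x1A2B, 0x3C4D}), now + timedelta(days=7))
stale = CachedCRL(frozenset({0x1A2B}), now - timedelta(hours=1))

print(check_revocation(0x3C4D, fresh, now, fetch_crl=lambda: fresh)[0])  # True
# The stale copy does not list 0x3C4D, so a new CRL is fetched first:
print(check_revocation(0x3C4D, stale, now, fetch_crl=lambda: fresh)[0])  # True
```

The lookup itself is cheap; the cost lies in `fetch_crl`, which blocks verification while the whole list is downloaded.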

This way of checking certificate revocation is far from optimal. Its main limitations are:

- The CRL tends to grow over time, so fetching it becomes increasingly costly (more data to transfer over the network and to store locally). In this case, using TLS certificates clearly impacts performance.

- There is no mechanism to notify browsers when the list is updated. So if the browser relies on its local cache to check a certificate’s validity, it cannot be sure the certificate is still valid if the CRL has been updated since it was cached.

- Fetching the latest CRL from the CA may block certificate verification, which can add significant latency to the TLS handshake.

Online Certificate Status Protocol (OCSP)

OCSP was originally defined in RFC 2560 (since obsoleted by RFC 6960). It addresses most of the CRL limitations by allowing the client to query the CA in real time for the status of a specific certificate.

Unfortunately, it also introduces new challenges.

From a security point of view, OCSP may compromise the client’s privacy, as the CA learns which sites the client is browsing.

But more importantly, this process can have a big performance impact. First, the OCSP request is sent over a new TCP connection to the CA, which costs an additional RTT, and thus delay, just to establish that connection. Then the client has to wait for the CA’s response, and while it waits, the TLS handshake is on hold, adding more delay. Even worse, a DNS lookup may be required if the CA’s FQDN (Fully Qualified Domain Name) is not in the client’s DNS cache. One more delay!
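To put rough numbers on these delays, here is a back-of-the-envelope model in Python. It is a simplification, not a measurement: it assumes one RTT to open the TCP connection to the CA’s OCSP responder and one RTT for the request and response, plus an optional DNS lookup time and responder processing time, all supplied by the caller.

```python
def ocsp_added_latency_ms(rtt_to_responder_ms, dns_lookup_ms=0.0, processing_ms=0.0):
    """Rough extra handshake delay caused by a blocking OCSP check.

    Simplified model (an assumption, not a measurement):
    - optional DNS lookup of the responder's hostname
    - 1 RTT for the TCP handshake with the responder
    - 1 RTT for the OCSP request/response, plus responder processing time
    """
    tcp_handshake = rtt_to_responder_ms
    request_response = rtt_to_responder_ms + processing_ms
    return dns_lookup_ms + tcp_handshake + request_response


# Hypothetical figures: 50 ms RTT to the responder, 30 ms DNS lookup
print(ocsp_added_latency_ms(50, dns_lookup_ms=30))
```

With these hypothetical figures, the function returns 130.0, i.e. 130 ms added on top of the handshake itself, before any application data can flow.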

OCSP stapling

OCSP stapling is defined in RFC 6066, as the “Certificate Status Request” TLS extension, as well as in RFC 5019.

The principle is quite simple. Instead of letting the client query the CA about the status of a certificate, the server does it on the client’s behalf. It periodically fetches a signed, time-stamped OCSP response from the CA, caches it, and “staples” it to the handshake messages it sends back to the client. The client simply needs to verify the CA’s signature on the stapled response to validate the certificate’s status.
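On the server side, stapling boils down to caching the CA’s response and refreshing it before it expires. The sketch below assumes a hypothetical `fetch_ocsp_response` callable that returns the DER-encoded response together with its `nextUpdate` time as a Unix timestamp; the one-hour refresh margin is also an arbitrary assumption.

```python
import time


class StapleCache:
    """Server-side cache of the OCSP response stapled into TLS handshakes.

    fetch_ocsp_response is a hypothetical callable returning
    (response_bytes, next_update_unix_timestamp) from the CA's responder.
    """

    def __init__(self, fetch_ocsp_response, refresh_margin_s=3600, clock=time.time):
        self._fetch = fetch_ocsp_response
        self._margin = refresh_margin_s  # refresh this long before expiry
        self._clock = clock
        self._response = None
        self._next_update = 0.0

    def staple(self):
        """Return the response to attach to the current TLS handshake.

        The CA is only contacted when no response is cached yet or the
        cached one is close to expiry, never once per client connection.
        """
        if self._response is None or self._clock() >= self._next_update - self._margin:
            self._response, self._next_update = self._fetch()
        return self._response
```

The key property is that the CA is contacted once per refresh period rather than once per client connection. In practice you would rely on your TLS server rather than write this yourself; nginx, for instance, provides the `ssl_stapling` directive for this purpose.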

This solves the problems raised by the standard OCSP process: no privacy concern for the client, and no extra round trip from the client to the CA during the handshake.

Yeess! We solved all problems. Finally, TLS certificates do not impact performance… Hmmm, perhaps not.

The performance impact of TLS record size

The balance between overhead and latency

All application data delivered via TLS is carried by the “record” protocol. It defines a specific format that includes the data itself (at most 16 KB per record) and, depending on the chosen cipher, anywhere from 20 to 40 bytes of overhead for the header, MAC (Message Authentication Code), and optional padding (for block ciphers).
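The framing cost can be estimated with a few lines of Python. The 29-byte per-record overhead used below is an assumed value picked from the 20 to 40 byte range mentioned above; the actual figure depends on the negotiated cipher suite.

```python
import math

MAX_RECORD_PAYLOAD = 16 * 1024  # TLS limits record plaintext to 2^14 bytes


def framing_overhead(payload_bytes, record_size=MAX_RECORD_PAYLOAD, per_record_overhead=29):
    """Return (number_of_records, total_overhead_bytes) for a payload.

    per_record_overhead is an assumed value in the 20-40 byte range;
    the real figure depends on the cipher suite.
    """
    if not 0 < record_size <= MAX_RECORD_PAYLOAD:
        raise ValueError("record size must be between 1 byte and 16 KB")
    records = math.ceil(payload_bytes / record_size)
    return records, records * per_record_overhead


print(framing_overhead(1_000_000))                    # 16 KB records
print(framing_overhead(1_000_000, record_size=1400))  # small records
```

For a 1 MB response, this gives 62 records and 1,798 bytes of overhead with 16 KB records, versus 715 records and 20,735 bytes with 1,400-byte records: roughly 0.2% versus 2% of the payload, on top of the extra per-record CPU work.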

- From a server perspective, larger records mean lower CPU and byte overhead due to record framing and MAC verification.

- From a network perspective, though, larger records mean larger TCP buffers. In case of network degradation (packet loss, for example), they also mean additional latency: all the packets making up a large record must be delivered and reassembled by the TCP layer before the TLS layer can process the record and deliver it to the application.

In short, small records incur overhead and large records incur latency. There is no single “optimal” record size; instead, the best strategy is to adjust the record size dynamically.
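The trade-off can be made visible by computing, for a few record sizes, both the relative framing overhead and the number of TCP segments a single record spans. The sketch assumes a 1,460-byte MSS (1,500-byte MTU, no TCP options) and the same illustrative 29-byte per-record overhead.

```python
import math

MSS = 1460     # assumed TCP payload per segment
OVERHEAD = 29  # assumed per-record TLS overhead in bytes (20-40 depending on cipher)


def record_tradeoff(record_size):
    """Return (overhead_percent, tcp_segments_spanned) for one record.

    A record cannot be decrypted until all of its segments have arrived,
    so losing any one of them delays the whole record.
    """
    wire_bytes = record_size + OVERHEAD
    overhead_pct = 100 * OVERHEAD / record_size
    segments = math.ceil(wire_bytes / MSS)
    return overhead_pct, segments


for size in (1024, 1400, 4096, 16384):
    pct, segs = record_tradeoff(size)
    print(f"{size:>6} B record: {pct:4.1f}% overhead, spans {segs} segment(s)")
```

With these assumptions, a 1,400-byte record fits in a single segment at about 2% overhead, while a 16 KB record cuts overhead to roughly 0.2% but spans 12 segments, any one of which can hold up the whole record if it is lost.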

Record size and TCP connection state

One of the best ways to optimize the record size is to take the state of the TCP connection into account.

When a new TCP connection is established, the number of packets the sender can transmit before it must wait for the receiver’s acknowledgment is determined by the initial TCP congestion window (CWND). The window then grows dynamically as long as no packet loss is detected, so that an “optimal” number of packets can be sent without acknowledgment, depending on the network conditions (available bandwidth and network quality). Once the congestion window is exhausted, the sender must wait for an ACK, which adds an RTT, and thus latency!
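The effect of the congestion window on latency can be illustrated with an idealized slow-start model in Python. It assumes the window starts at `initcwnd` segments of 1,460 bytes and doubles every RTT, with no packet loss and no receive-window limit, which is optimistic but enough to show when an extra RTT appears.

```python
import math


def round_trips_needed(total_bytes, initcwnd=10, mss=1460):
    """Round trips needed to deliver total_bytes on a fresh connection.

    Idealized slow start (an assumption): the congestion window starts at
    initcwnd segments and doubles every RTT, with no loss and no
    receive-window limit.
    """
    segments = math.ceil(total_bytes / mss)
    cwnd, sent, rtts = initcwnd, 0, 0
    while sent < segments:
        sent += cwnd  # one full window per round trip
        cwnd *= 2     # window doubles after each round trip
        rtts += 1
    return rtts


print(round_trips_needed(14_600))  # exactly 10 segments
print(round_trips_needed(14_601))  # one byte more
```

With an initial window of 10 segments, the first call returns 1 and the second returns 2: a single byte beyond the window costs a full RTT.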

So when the connection is new and the congestion window is still small, or when the connection has been idle for some time, each record should be small enough to fit in a single TCP segment. When the congestion window is large and we are transferring a large stream (e.g., streaming video), the TLS record size can be increased to span multiple TCP packets, reducing framing and CPU overhead on both client and server.

Some servers implement dynamic record sizing to avoid overflowing the CWND: they start with small records and increase the maximum record size once the session has been established. Others let you specify the maximum record size you wish to allow.
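A dynamic record sizing policy of the kind described above might look like the following Python sketch. The class name and all thresholds (1,400-byte small records, switching to 16 KB once 1 MB has been sent, resetting after one second of idle time) are illustrative assumptions, not values taken from a specific server.

```python
class DynamicRecordSizer:
    """Pick the TLS record size for the next write (hypothetical policy).

    - start with small records that fit in one TCP segment,
    - switch to 16 KB records once enough data has been sent that the
      congestion window has likely grown,
    - fall back to small records after the connection has been idle.
    """

    SMALL = 1400             # assumed: fits in one 1,460-byte segment with TLS overhead
    LARGE = 16 * 1024        # TLS maximum record payload
    BOOST_AFTER = 1_000_000  # assumed: bytes sent before switching to large records
    IDLE_RESET_S = 1.0       # assumed: idle time after which we start small again

    def __init__(self):
        self.bytes_sent = 0
        self.last_write = None

    def next_record_size(self, now):
        if self.last_write is not None and now - self.last_write > self.IDLE_RESET_S:
            self.bytes_sent = 0  # the congestion window may have shrunk while idle
        return self.LARGE if self.bytes_sent >= self.BOOST_AFTER else self.SMALL

    def on_record_sent(self, size, now):
        self.bytes_sent += size
        self.last_write = now


sizer = DynamicRecordSizer()
print(sizer.next_record_size(now=0.0))  # small records on a fresh connection
```

For the static alternative, nginx, for example, exposes an `ssl_buffer_size` directive that sets the size of the buffer used to send data.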

Record size and TLS handshake

So, when a TCP connection is established, making sure the first TLS records do not exceed the initial CWND ensures the best performance. So let’s do that! Well, even if it seems obvious, reality can be different.

Remember that the TLS handshake follows the TCP handshake. During the TLS handshake, the server sends its certificate chain back to the client. This chain should be complete: if one of the intermediate certificates is missing, the browser will have to fetch it over an additional TCP connection, which of course incurs additional latency!

Let’s take a concrete example:

The average certificate chain depth is 2 to 3, and the average certificate size is around 1 to 1.5 KB. This does not include the overhead of a stapled OCSP response…

Older systems may still use an initial CWND of 4 TCP segments… On an Ethernet infrastructure with an MTU of 1,500 bytes, you can see how quickly you may exceed this CWND just by sending the certificate chain, generating an additional RTT and thus adding latency!
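The arithmetic above can be checked with a small Python sketch. It assumes an MSS of 1,460 bytes (a 1,500-byte MTU minus 20-byte IPv4 and 20-byte TCP headers, no options), a depth-3 chain of 1.5 KB certificates and, for the stapled OCSP response, a hypothetical size of 1,500 bytes. It ignores the other handshake messages, so real totals are higher.

```python
import math

MTU = 1500
MSS = MTU - 20 - 20  # minus IPv4 and TCP headers (no options): 1,460 bytes


def handshake_segments(cert_sizes, staple_bytes=0, other_bytes=0):
    """TCP segments needed for the certificate-related handshake data."""
    return math.ceil((sum(cert_sizes) + staple_bytes + other_bytes) / MSS)


def fits_initial_cwnd(cert_sizes, initcwnd, staple_bytes=0, other_bytes=0):
    """True if that data can be sent without waiting for an ACK."""
    return handshake_segments(cert_sizes, staple_bytes, other_bytes) <= initcwnd


chain = [1536, 1536, 1536]  # depth-3 chain, 1.5 KB per certificate
for initcwnd in (4, 10):
    for staple in (0, 1500):  # 1,500-byte staple is a hypothetical size
        segs = handshake_segments(chain, staple)
        fits = fits_initial_cwnd(chain, initcwnd, staple)
        print(f"initcwnd={initcwnd:>2} staple={staple:>4} B -> {segs} segments, fits: {fits}")
```

With these assumptions, the chain alone needs 4 segments and just fits an initial CWND of 4; adding the hypothetical staple pushes it to 5 segments, which exceeds the window and costs an extra RTT, while a CWND of 10 absorbs both.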

Most modern systems use an initial CWND of 10 TCP segments (as proposed in RFC 6928), but this is something to keep an eye on.

Takeaways

Nowadays, you cannot afford to deploy digital services without protecting both your resources and the users who connect to them. TLS is the protocol of choice for that. But features like certificates do impact performance.

Modern systems can keep the performance impact of TLS to a strict minimum. In addition to the intrinsic evolution of hardware performance, additional techniques help you get the best possible performance. Some are obvious, like using TLS 1.3 to reduce the number of RTTs required, or adopting the False Start technique to send data as quickly as possible. But other factors have non-obvious side effects that can hurt performance. Congesting the initial CWND is one of them.

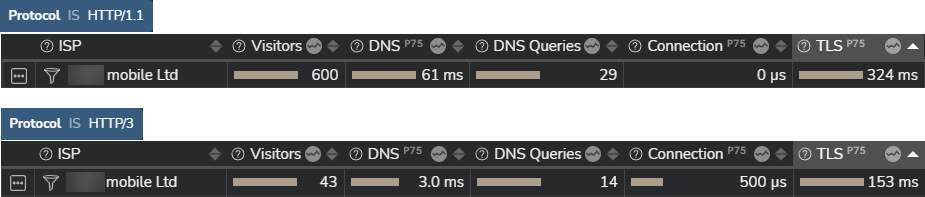

The first step towards minimizing the performance impact of TLS on your digital services is to measure it and correlate the values with parameters such as the HTTP protocol used. The screenshot above shows an example of TLS performance when connecting to a specific digital service. You can clearly see how adopting HTTP/3 (which requires TLS 1.3) significantly improves overall TLS performance compared to HTTP/1.1: the TLS handshake takes 153 ms with HTTP/3 vs 324 ms with HTTP/1.1.

To follow up on this article, I invite you to take a look at our capabilities in terms of real user monitoring here.