As this blog article explains, latency is the delay for a packet to travel on a network from one point to another. Different factors, such as processing, serialization, and queuing, drive this latency. With new hardware and software capabilities, you can potentially reduce the impact these elements have on latency. But there is one thing you will never improve: the speed of light!

As Einstein outlined in his theory of special relativity, the speed of light is the maximum speed at which all energy, matter, and information can travel. In modern optical fiber, signals travel at around 200,000,000 meters per second, against 299,792,458 meters per second for light in a vacuum. Not too bad!

For a connection between New York and Sydney, the one-way latency is about 80 ms. This value assumes a direct link between both cities, which will of course usually not be the case. Packets will traverse multiple hops, each one introducing additional routing, processing, queuing, and transmission delays. You’ll probably end up with a latency between 100 and 150 ms. Still pretty fast, right?
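The 80 ms figure follows from dividing distance by propagation speed. Here is a minimal sketch of that arithmetic, assuming a great-circle distance of roughly 16,000 km between New York and Sydney (a figure not given above) and the fiber speed quoted earlier. The function and constant names are illustrative only.

```python
# Propagation speed in optical fiber, as quoted in the text (about 2/3 of c).
SPEED_IN_FIBER_M_PER_S = 200_000_000

# Assumption: approximate great-circle distance New York <-> Sydney.
NY_SYDNEY_DISTANCE_M = 16_000_000


def propagation_delay_ms(distance_m: float,
                         speed_m_per_s: float = SPEED_IN_FIBER_M_PER_S) -> float:
    """Return the one-way propagation delay in milliseconds."""
    return distance_m / speed_m_per_s * 1000.0


one_way_ms = propagation_delay_ms(NY_SYDNEY_DISTANCE_M)
print(f"one-way: {one_way_ms:.0f} ms, round trip: {2 * one_way_ms:.0f} ms")
# prints "one-way: 80 ms, round trip: 160 ms"
```

This only accounts for propagation over an ideal direct link. Routing, queuing, and processing at each hop come on top of it.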

Well, latency remains the performance bottleneck for most websites! Let’s see why.

The TCP/IP protocol stack

As of today, the TCP/IP protocol stack dominates the Internet. IP (Internet Protocol) provides node-to-node routing and addressing, while TCP (Transmission Control Protocol) provides the abstraction of a reliable network running over an unreliable channel.

IP and TCP were published as RFC 791 and RFC 793, respectively, back in September 1981. Quite old protocols…

Even if new UDP-based protocols are emerging, like HTTP/3, which we will discuss in a future article, TCP is still used today by most popular applications: World Wide Web, email, file transfers, and many others.

One could argue that TCP cannot cope with the performance requirements of today’s systems. Let’s explain why.

The three-way handshake

As stated before, TCP provides an effective abstraction of a reliable network running over an unreliable channel. The basic idea is that TCP guarantees delivery: it takes care of retransmitting lost data, in-order delivery, congestion control and avoidance, data integrity, and more.

In order for all of this to work, TCP tracks the data it sends with sequence numbers. For security reasons, the numbering does not start at 1. Each side of a TCP-based conversation (a TCP session) sends a randomly generated ISN (Initial Sequence Number) to the other side, which serves as the starting point for its sequence numbers.

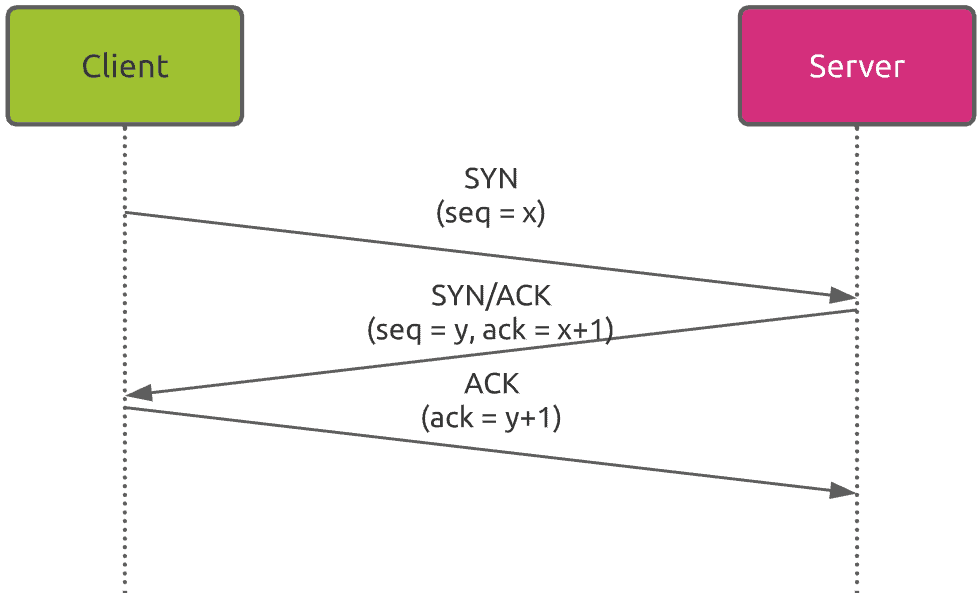

This information exchange occurs in what is called the TCP “three-way handshake”:

- Step 1 (SYN): The client wants to establish a connection with the server, so it sends a packet (called a segment at the TCP layer) with the SYN (Synchronize Sequence Numbers) flag set, which informs the server that it intends to start communicating. This first segment includes the client’s ISN (Initial Sequence Number).

- Step 2 (SYN/ACK): The server responds to the client’s request with a segment that has both the SYN and ACK flags set. It provides the client with its own ISN and acknowledges receipt of the client’s first segment (ACK).

- Step 3 (ACK): The client finally acknowledges receipt of the server’s SYN/ACK segment.

At this stage, the TCP session is established.
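The three steps above can be sketched as a small simulation. This is not a real TCP implementation: the `Segment` class and `three_way_handshake` function are hypothetical names, and the model only tracks flags, sequence numbers, and acknowledgment numbers. It relies on the fact that a SYN consumes one sequence number, so each side acknowledges the peer's ISN plus one.

```python
import secrets
from dataclasses import dataclass
from typing import List, Optional

SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32 bits wide and wrap around


@dataclass
class Segment:
    flags: str                 # "SYN", "SYN/ACK" or "ACK"
    seq: int                   # sender's sequence number
    ack: Optional[int] = None  # next sequence number expected from the peer


def three_way_handshake(client_isn: Optional[int] = None,
                        server_isn: Optional[int] = None) -> List[Segment]:
    """Return the three segments exchanged to open a session."""
    # Each side picks a random ISN unless one is supplied (useful for tests).
    if client_isn is None:
        client_isn = secrets.randbelow(SEQ_SPACE)
    if server_isn is None:
        server_isn = secrets.randbelow(SEQ_SPACE)

    # Step 1: client -> server, carries the client's ISN.
    syn = Segment("SYN", seq=client_isn)
    # Step 2: server -> client, carries the server's ISN and acknowledges the SYN.
    syn_ack = Segment("SYN/ACK", seq=server_isn, ack=(syn.seq + 1) % SEQ_SPACE)
    # Step 3: client -> server, acknowledges the server's SYN.
    ack = Segment("ACK", seq=(client_isn + 1) % SEQ_SPACE,
                  ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


for segment in three_way_handshake():
    print(segment)
```

Passing fixed ISNs, for example `three_way_handshake(100, 300)`, makes the acknowledgment numbers (101 and 301) easy to follow.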

The impact of TCP on total latency

Establishing a TCP session costs 1.5 round trips, that is, three one-way trips. So, taking the example of a communication between New York and Sydney, with a one-way latency of 100 to 150 ms, this introduces a setup delay typically between 300 and 450 ms!
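To get a feel for this setup cost on a real path, you can time how long it takes to open a TCP connection. The sketch below is only illustrative: `measure_connect_ms` is a hypothetical helper name, and the demo targets a throwaway listener on localhost so that it runs without network access. Note that, from the client's point of view, the connect call returns once the SYN/ACK has arrived, so the measured value approximates one round trip. It also includes name resolution when a hostname is passed.

```python
import socket
import time


def measure_connect_ms(host: str, port: int, timeout: float = 5.0) -> float:
    """Return the time in milliseconds needed to open a TCP connection."""
    start = time.perf_counter()
    # create_connection() returns once the handshake is complete on the client side.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


if __name__ == "__main__":
    # Demo against a temporary local listener; the OS picks a free port.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        host, port = server.getsockname()
        print(f"connect to {host}:{port} took {measure_connect_ms(host, port):.3f} ms")
```

On localhost the result is a fraction of a millisecond. Against a distant server, the same call is dominated by the network round trip.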

This does not even take secured communications (HTTPS through TLS) into account, which add further round trips to negotiate security parameters. This part will be covered in a future article.

How to reduce the impact of latency on performance?

So how can you reduce the impact of latency on performance if you cannot improve the transmission speed?

In fact, you can act on two factors:

- The distance between the client and the server

- The number of packets to transmit through the network

There are different ways to reduce the distance between the client and the server. First, you can use Content Delivery Network (CDN) services to deliver resources from locations closer to the users. Second, caching resources makes data available directly from the user’s device: in this case, no data at all has to cross the network.

In addition to reducing the distance between the client and the server, you can also reduce the number of packets to transmit on a network. One of the best examples is the use of compression techniques.
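As a rough illustration of the effect of compression, the sketch below compares how many TCP segments a payload needs before and after gzip. It assumes a maximum segment size of 1460 bytes (a common value on Ethernet with a 1500-byte MTU, not stated in this article), and the sample payload is made up and deliberately repetitive, so real pages will compress less dramatically.

```python
import gzip
import math

# Assumption: typical TCP payload per segment on Ethernet (1500-byte MTU, IPv4).
MSS_BYTES = 1460


def segments_needed(payload: bytes, mss: int = MSS_BYTES) -> int:
    """Return how many segments are needed to carry the payload."""
    return math.ceil(len(payload) / mss)


# Made-up, highly repetitive HTML fragment used as sample data.
html = b"<li class='item'>latency matters</li>\n" * 2000
compressed = gzip.compress(html)

print(f"raw:  {len(html)} bytes, {segments_needed(html)} segments")
print(f"gzip: {len(compressed)} bytes, {segments_needed(compressed)} segments")
```

Fewer segments mean fewer packets exposed to loss and retransmission, and fewer round trips while the connection is still ramping up its sending rate.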

Nevertheless, the optimization you can achieve has limits because of how transmission protocols work… The TCP handshake always requires 1.5 round trips. The only way to avoid this would be to replace TCP with another protocol, which is the trend we’ll certainly see in the future.